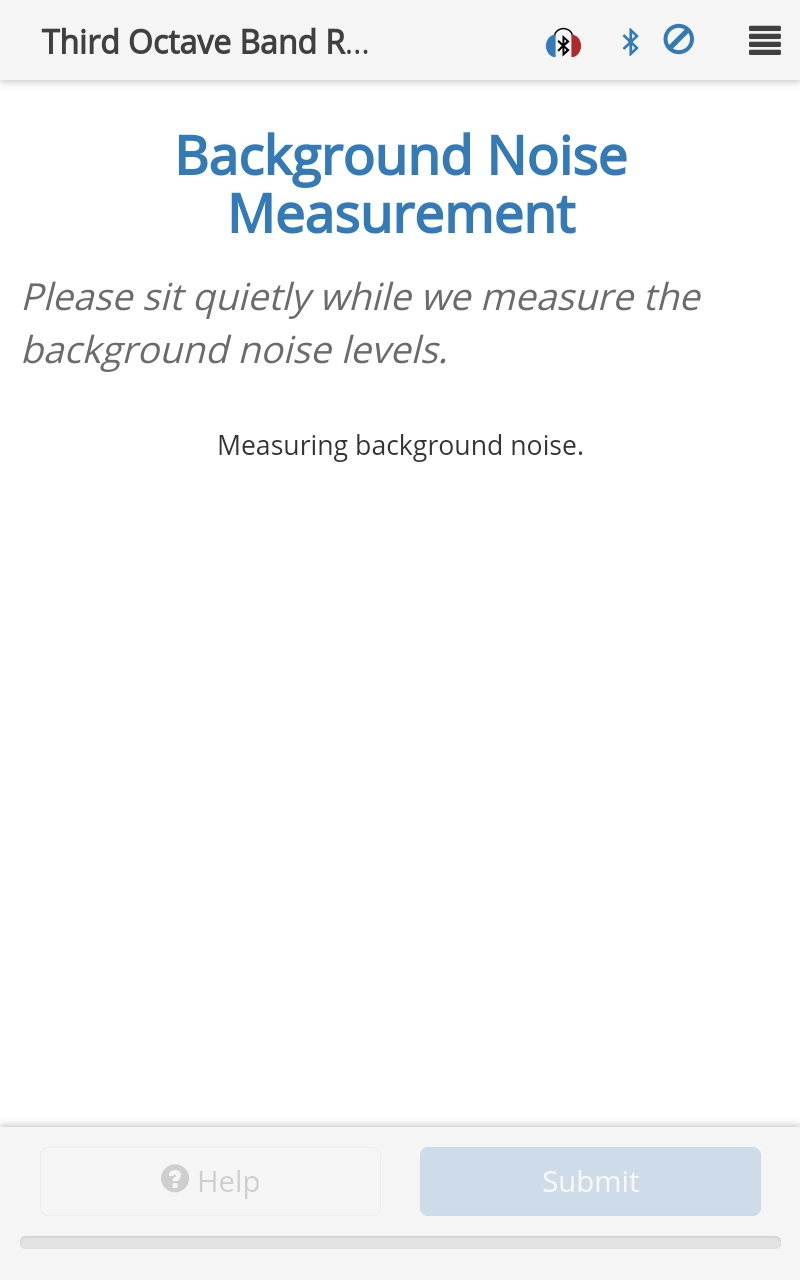

WAHTS Response Areas

TabSINT provides many WAHTS-specific response areas for use within WAHTS protocols. Each response area type below is documented with a protocol example and, where available, an image. As with the regular response areas, a number of shared WAHTS response area definitions may be used within any WAHTS response area when referenced.

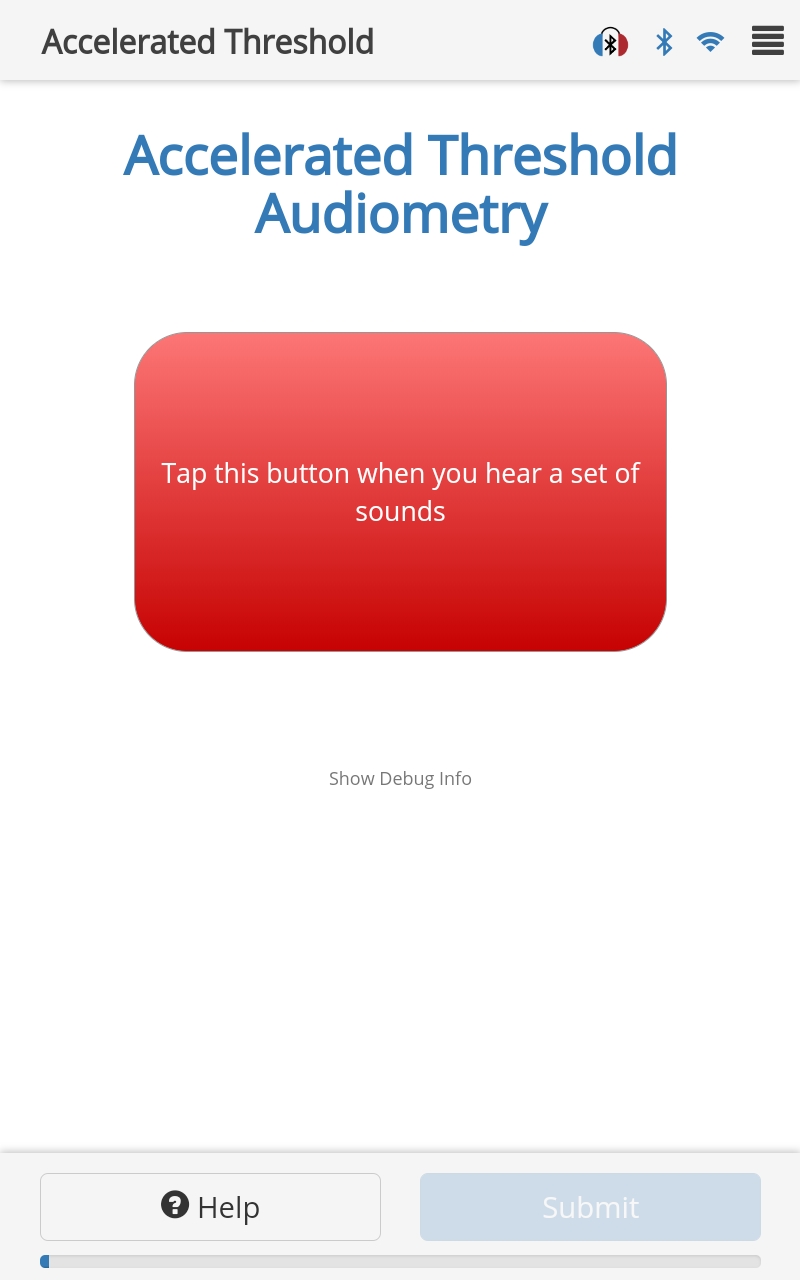

Accelerated Threshold Response Area

A response area for performing an Accelerated Threshold exam, which measures hearing thresholds using a learning algorithm to achieve rapid convergence. The current accelerated threshold algorithm is inspired by support-vector machines (SVM).

Protocol Example

{
  "id": "Accelerated Threshold",
  "title": "Accelerated Threshold",
  "questionMainText": "Accelerated Threshold Audiometry",
  "responseArea": {
    "type": "chaAcceleratedThreshold",
    "autoSubmit": true,
    "examInstructions": "Tap the button once for each sound you hear.",
    "examProperties": {
      "UseSoftwareButton": true
    }
  }
}

Options

Audiometry Page Properties may be defined on the PAGE, not within the responseArea.

- examProperties:
  - Type: object
  - Description: Properties defining the exam, including:
    - SVM_C:
      - Type: integer
      - Description: C parameter (weighting for loss function) for the SVM algorithm. (Default = 100)
    - SVM_D:
      - Type: number
      - Description: d parameter (margin offset) for the SVM algorithm. (Default = 0.01)
    - SVM_M:
      - Type: integer
      - Description: m parameter (margin weighting) for the SVM algorithm. (Default = 10)
    - SVM_MaxJump:
      - Type: integer
      - Description: Maximum size of any change in level the algorithm may make. (Default = 20)
    - SVM_StagDist:
      - Type: integer
      - Description: Stagnation distance criterion. Changes within this distance lead to the satisfaction of the stagnation end condition. (Default = 5)
    - SVM_N_StagSteps:
      - Type: integer
      - Description: Number of presentations to consider to evaluate stagnation. (Default = 3)
    - SVM_MinStep:
      - Type: integer
      - Description: Minimum number of presentations before the exam can end. (Default = 5)
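The three stagnation-related options (SVM_StagDist, SVM_N_StagSteps, SVM_MinStep) work together to decide when the exam may end. The Python sketch below shows one plausible reading of that end condition. It is an assumption made for illustration only, not the WAHTS firmware's actual logic, and the function name `stagnation_reached` is hypothetical.

```python
def stagnation_reached(levels, stag_dist=5, n_stag_steps=3, min_step=5):
    """Return True if the presented levels appear to have stagnated.

    Assumed interpretation (not confirmed by the firmware):
    - at least `min_step` presentations have been made (SVM_MinStep), and
    - each of the last `n_stag_steps` level changes (SVM_N_StagSteps)
      is no larger than `stag_dist` (SVM_StagDist).
    """
    if len(levels) < min_step or len(levels) < n_stag_steps + 1:
        return False
    recent = levels[-(n_stag_steps + 1):]
    changes = [abs(b - a) for a, b in zip(recent, recent[1:])]
    return all(change <= stag_dist for change in changes)


# Last three changes are 3, 1 and 1, all within the default distance of 5.
print(stagnation_reached([30, 15, 22, 19, 20, 21]))
# Only three presentations, fewer than the default minimum of 5.
print(stagnation_reached([30, 15, 25]))
```

Under this reading, raising SVM_N_StagSteps or lowering SVM_StagDist would make the exam harder to end early.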

Response

The result.response is a number corresponding to the threshold level in LevelUnits. The result object also contains the Common Audiometry Responses and:

result.L = [30,15, ...] // Array of levels presented

result.RetSPL = 15 // Reference Equivalent Threshold Sound Pressure Level (RetSPL) at the test frequency

result.FalsePositive = [0,0, ...] // Array of numbers indicating the number of responses to each presentation that occurred outside the polling time window (may be 0, 1, 2 or 3 where 3 indicates 3+)

result.NumCorrectResp = 0 // Number of presentations correctly answered (only used when Screener = true)

result.ResponseTime = [859,489, ...] // Array of numbers indicating the response time (ms) to each presentation (no response recorded as 0)

Schema

Audiometry List Response Area

This response area is deprecated as of TabSINT version 4.4.0.

This response area allows the user to run many audiometry exams from a single protocol page.

Protocol Example

{
  "id": "AudiometryList",
  "title": "Test List",
  "questionMainText": "Hughson-Westlake Audiometry",
  "helpText": "Follow instructions",
  "instructionText": "This test measures your hearing sensitivity. You will hear sounds at different pitches one ear at a time. Your task is to tap the button when you hear a sound, no matter how soft the sound may be.",
  "responseArea": {
    "type": "chaAudiometryList",
    "repeatGroup": true,
    "randomizeList": true,
    "notesOnGroupFailedTwice": true,
    "presentationList": [
      {"F": 500, "OutputChannel": "HPL0"},
      {"F": 1000, "OutputChannel": "HPL0"},
      {"F": 2000, "OutputChannel": "HPL0"},
      {"F": 500, "OutputChannel": "HPR0"},
      {"F": 1000, "OutputChannel": "HPR0"},
      {"F": 2000, "OutputChannel": "HPR0"}
    ],
    "commonExamProperties": {
      "Lstart": 30,
      "UseSoftwareButton": true,
      "LevelUnits": "dB HL"
    },
    "commonResponseAreaProperties": {
      "pause": true
    }
  }
}

Options

- examInstructions:
  - Type: string
  - Description: Replaces the top-level instruction text on the CHA exam pages (each page after starting page).
- audiometryType:
  - Type: string
  - Description: Type of audiometry exam to run. The available option is HughsonWestlake. (Default = HughsonWestlake)
- repeatGroup:
  - Type: boolean
  - Description: If true and any tests in a group fail to converge the first time, ask the listener at the end of the group to focus and repeat only the failed tests.
- notesOnGroupFailedTwice:
  - Type: boolean
  - Description: If true and any tests fail the second time, show "Please hand tablet to test administrator" at the end of the group and then prompt the administrator to enter notes.
- randomizeList:
  - Type: boolean
  - Description: If true, the response area will shuffle the presentation list into a random order. (Default = false)
- presentationList:
  - Type: array
  - Description: Array of audiometry exams to run. Any properties defined here will supersede commonExamProperties.
    - id:
      - Type: string
      - Description: Custom presentationId to use for the result for each frequency. (Default = parent id _ Frequency)
- commonExamProperties:
  - Type: object
  - Description: Object containing any of:
- commonResponseAreaProperties:
  - Type: object
  - Description: Object containing any of:
    - measureBackground:
      - Type: string
      - Description: Method to use to measure background noise after an audiometry exam. Can be ThirdOctaveBands.
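Because properties in presentationList supersede commonExamProperties, each exam can be thought of as the common properties overlaid with its own entry. The following Python sketch illustrates that precedence. The function name `expand_presentations` is hypothetical, and the exact string produced for the default id (here `AudiometryList_500`) is an assumed rendering of "parent id _ Frequency".

```python
def expand_presentations(page_id, response_area):
    """Build one merged exam definition per presentationList entry."""
    common = response_area.get("commonExamProperties", {})
    exams = []
    for presentation in response_area.get("presentationList", []):
        # Entries in presentationList supersede commonExamProperties.
        exam = {**common, **presentation}
        # Assumed rendering of the documented default "parent id _ Frequency".
        exam.setdefault("id", f"{page_id}_{exam.get('F')}")
        exams.append(exam)
    return exams


response_area = {
    "presentationList": [
        {"F": 500, "OutputChannel": "HPL0"},
        {"F": 1000, "OutputChannel": "HPL0", "Lstart": 40, "id": "left_1k"},
    ],
    "commonExamProperties": {"Lstart": 30, "LevelUnits": "dB HL"},
}

for exam in expand_presentations("AudiometryList", response_area):
    print(exam)
```

The second entry keeps its own `Lstart` and `id`, while the first inherits `Lstart` from the common properties.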

Response

The chaAudiometryList creates a result object for each presentation in the presentationList, where the presentationId is the id if specified in the protocol.

For each presentation, the result.response is the threshold value (if the exam converged) or Failed to Converge (if the exam failed to converge). Each result object contains Common Audiometry Responses as well as:

result.L = [30,15, ...] // Array of numbers indicating the levels presented

result.RetSPL = 15 // Reference Equivalent Threshold Sound Pressure Level (RetSPL) at the test frequency

result.FalsePositive = [0,0, ...] // Array (of length equal to length of L) indicating the number of responses to the corresponding presentation that occurred outside the polling time window (3 indicates 3 or more)

result.NumCorrectResponse = 0 // Number of presentations correctly answered (only used when Screener = true)

result.ResponseTime = [874,578, ...] // Array of numbers recording the response time in ms to each presentation (0 if no response)

Schema

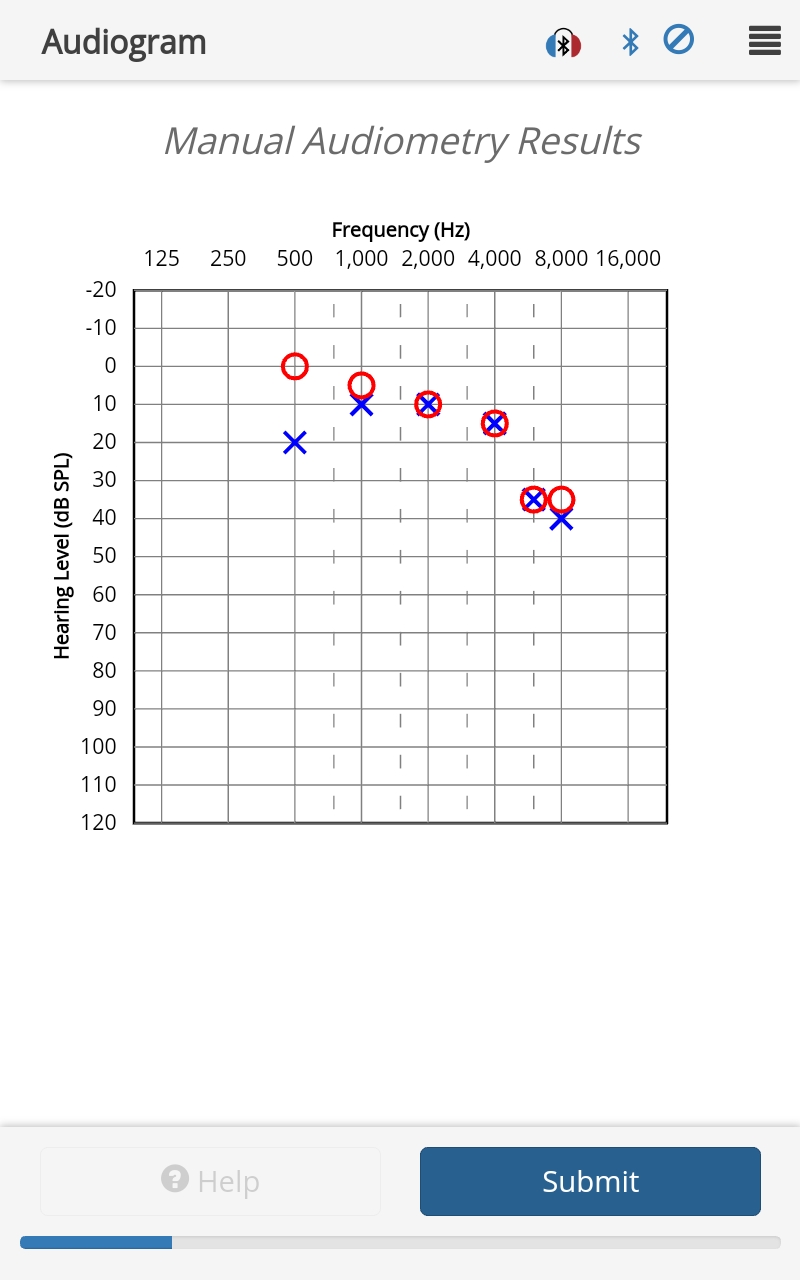

Audiometry Results Plot Response Area

Use this page after an audiometry exam page to display an audiogram of the results. The example given here was used in conjunction with the example given for the Manual Audiometry Response Area.

Protocol Example

{
  "id": "ManualAudiometryPlot",
  "title": "Manual Audiometry",
  "questionSubText": "Manual Audiometry Results",
  "responseArea": {
    "type": "chaAudiometryResultsPlot",
    "displayIds": ["ManualAudiometry"]
  }
}

Options

- displayIds:
  - Type: array
  - Description: An array of strings indicating the ids of the pages whose results to plot, e.g. ["training"] or ["section1_left", "section1_right"]

Response

The result.response from this response area contains no meaningful data. The result object only contains the Common TabSINT Responses. Of interest to the user is the audiogram generated using the data collected in the preceding audiometry pages.

Schema

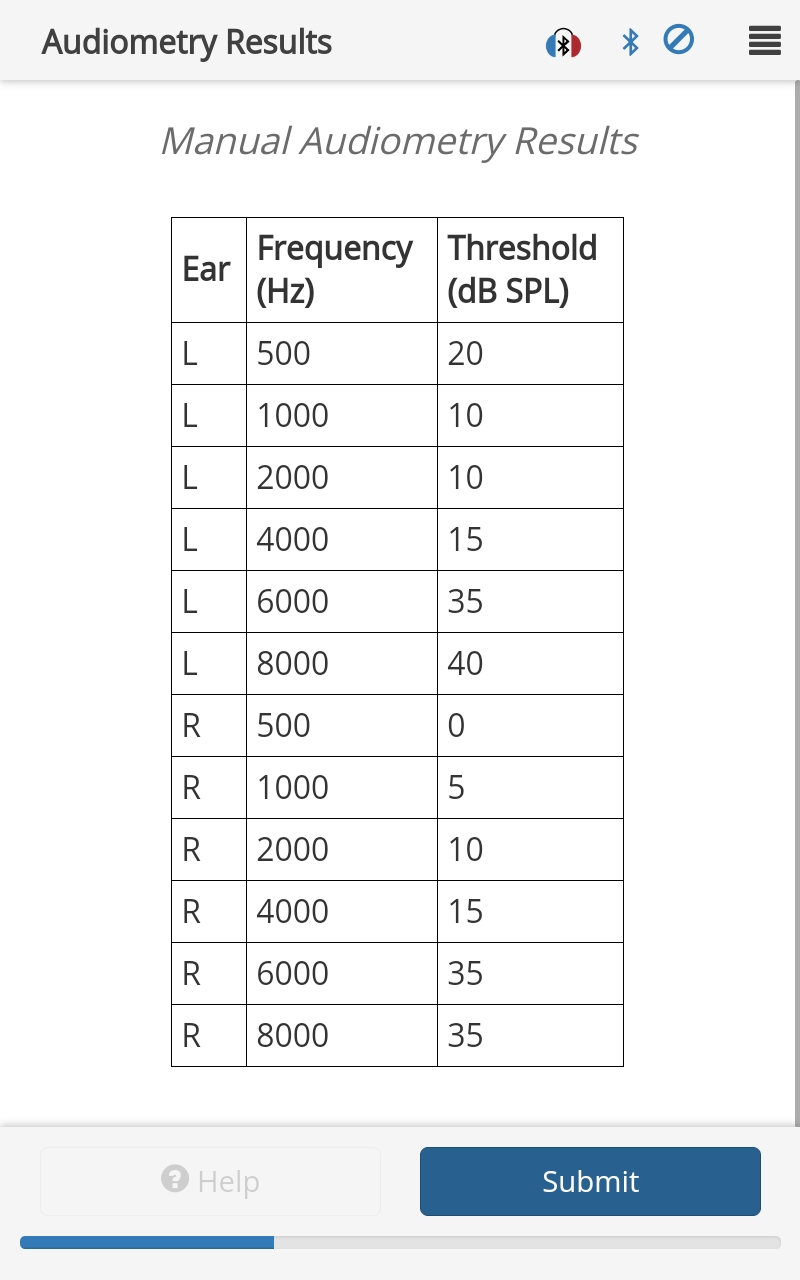

Audiometry Results Table Response Area

Use this page after an audiometry exam page to display a table of the results. The example given here was used in conjunction with the example given for the Manual Audiometry Response Area.

Protocol Example

{
  "id": "ManualAudiometryTable",
  "title": "Manual Audiometry",
  "questionSubText": "Manual Audiometry Results",
  "responseArea": {
    "type": "chaAudiometryResultsTable",
    "displayIds": ["ManualAudiometry"]
  }
}

Options

- displayIds:
  - Type: array
  - Description: An array of strings indicating the ids of the pages whose results to display, e.g. ["training"] or ["section1_left", "section1_right"]
- showSLMNoise:
  - Type: boolean
  - Description: Display the background noise measured from the SLM probe in the table. (Default = false)
- showSvantek:
  - Type: boolean
  - Description: Display the background noise measured from the dosimeter in the table. (Default = false)

Response

The result.response from this response area contains no meaningful data. The result object only contains the Common TabSINT Responses. Of interest to the user is the table generated using the data collected in the preceding audiometry pages.

Schema

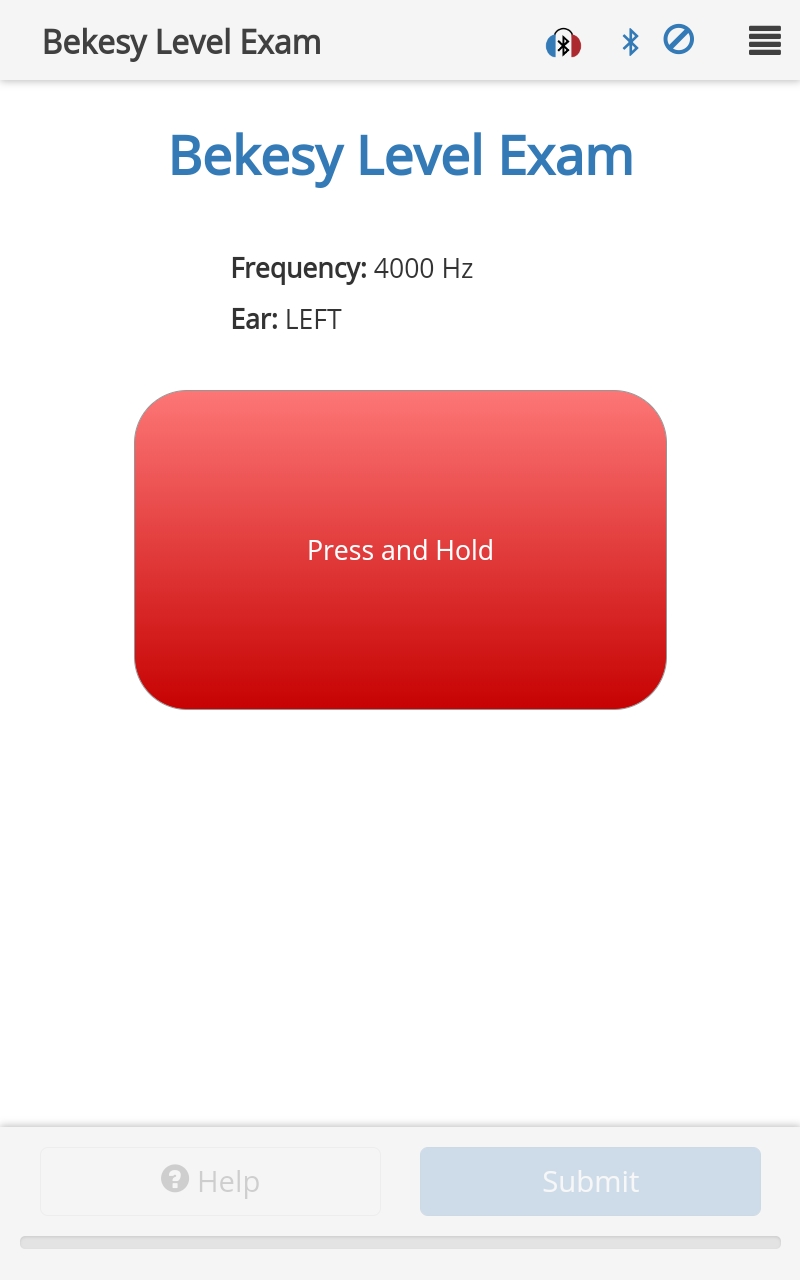

Bekesy Like Response Area

Run a Bekesy-Like level threshold exam.

Protocol Example

{
  "id": "BekesyLike",
  "title": "Bekesy Level Exam",
  "questionMainText": "Bekesy Level Exam",
  "instructionText": "Press and hold the button only when you hear the tones.",
  "responseArea": {
    "type": "chaBekesyLike",
    "examInstructions": "Press and hold the button only when you hear the tones.",
    "examProperties": {
      "F": 4000,
      "Lstart": 50,
      "PresentationMax": 100,
      "UseSoftwareButton": true,
      "LevelUnits": "dB SPL",
      "OutputChannel": "HPL0"
    }
  }
}

Options

Audiometry Page Properties may be defined on the PAGE, not within the responseArea.

- examProperties:
  - Type: object
  - Description: May contain any of the properties:
- exportToCSV:
  - Type: boolean
  - Description: If true, export the result to CSV upon submitting exam results. (Default = false)

Response

For a chaBekesyLike response area, result.response is a number corresponding to the threshold level in LevelUnits. The result object also contains:

result.Threshold = 5 // Threshold (LevelUnits)

result.Units = "dB SPL" // Same as LevelUnits defined in the protocol

result.L = [50, 54, ...] // Array of numbers indicating the levels (in LevelUnits) presented during the exam

result.MaximumExcursion = 14 // Maximum difference (dB) between consecutive user responses that occurred during ReversalKeep period

result.RetSPL = 10 // Reference Equivalent Threshold Sound Pressure Level (RetSPL) at the test frequency

result.Slope = -0.061290324 // Slope of L in dB per presentation over the ReversalKeep period

Note that the Common Audiometry Responses will also be provided.

Schema

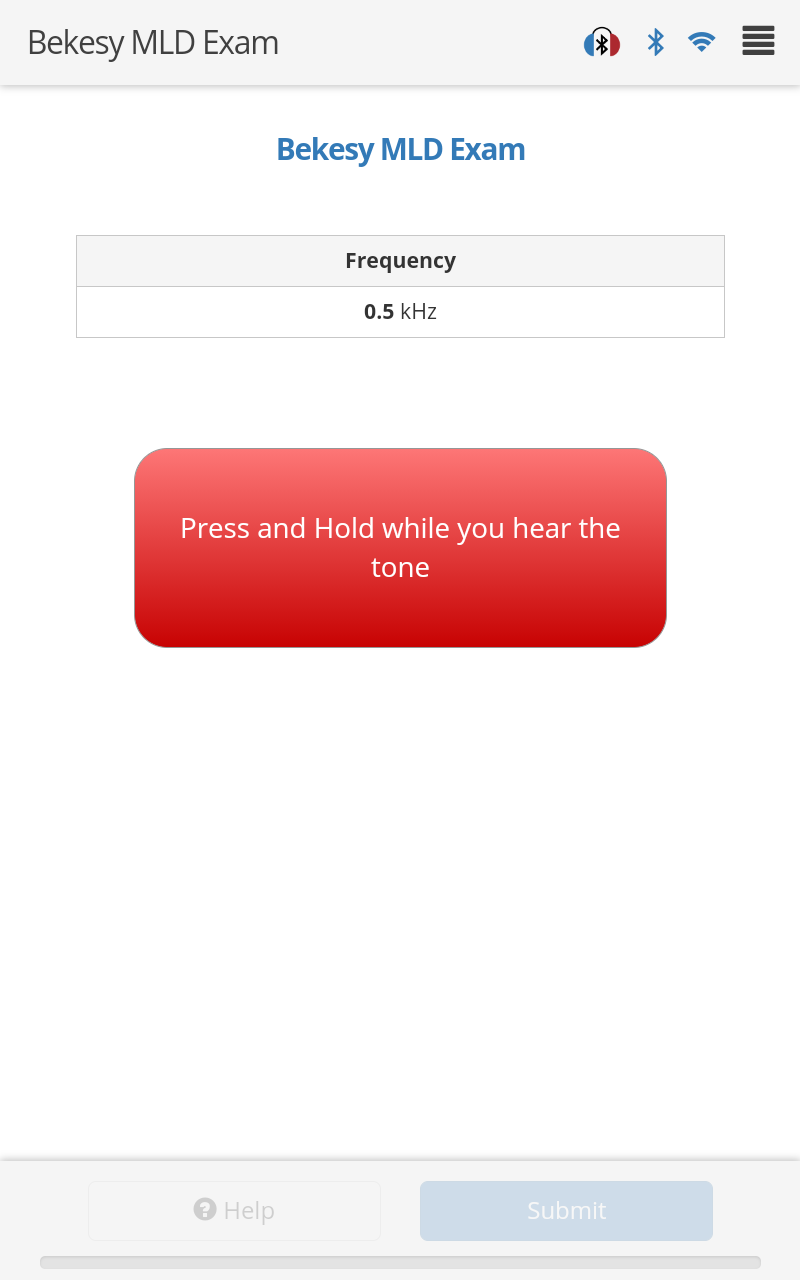

Bekesy MLD Response Area

Run a Bekesy Masking Level Difference (MLD) exam.

Protocol Example

{
  "id": "BekesyMLD",
  "title": "Bekesy MLD Exam",
  "questionMainText": "Bekesy MLD Exam",
  "instructionText": "Press and hold the button only when you hear the tones.",
  "responseArea": {
    "type": "chaBekesyMLD",
    "examInstructions": "Press and hold the button only when you hear the tones.",
    "examProperties": {
      "UseSoftwareButton": true,
      "F": 500,
      "Lstart": 70,
      "LowCutoff": 354,
      "HighCutoff": 707,
      "PresentationMax": 200,
      "IncrementStart": 1,
      "MaskerEar": 2,
      "MaskerPhase": 0,
      "TargetEar": 2,
      "TargetPhase": 0,
      "InitialSNR": 0
    }
  }
}

Options

Audiometry Page Properties may be defined on the PAGE, not within the responseArea.

- examProperties:
  - Type: object
  - Description: May contain any of the properties:
    - MaskerEar:
      - Type: number
      - Description: Channel to be used for the masker noise, where 0 = Left, 1 = Right, 2 = Both. (Default = 2)
    - MaskerPhase:
      - Type: enum
      - Description: Phase of the masking material delivered to the right channel (used only if MaskerEar = 2). Can be 0 or 180. If 0, deliver the exact same noise to both channels. If 180, invert it at the right ear. (Default = 0)
    - LowCutoff:
      - Type: number
      - Description: Low cutoff frequency (Hz) to filter the masker noise. (Default = 500)
    - HighCutoff:
      - Type: number
      - Description: High cutoff frequency (Hz) to filter the masker noise. (Default = 2000)
    - TargetEar:
      - Type: number
      - Description: Channel to be used for the target, where 0 = Left, 1 = Right, 2 = Both. (Default = 2)
    - TargetPhase:
      - Type: enum
      - Description: Phase of the target material delivered to the right channel (used only if TargetEar = 2). Can be 0 or 180. If 0, deliver the exact same target to both channels. If 180, invert it at the right ear. (Default = 0)
    - InitialSNR:
      - Type: number
      - Description: Initial SNR (dB). The masker level is set as Lstart - InitialSNR. (Default = 5, Minimum = -15, Maximum = 10)
- exportToCSV:
  - Type: boolean
  - Description: If true, export the result to CSV upon submitting exam results. (Default = false)
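The relationship between Lstart, InitialSNR and the masker level can be checked with a few lines of Python. This is a minimal sketch of the documented formula and range only. The function name `masker_level` is hypothetical, and whether the exam rejects out-of-range values in this way is an assumption.

```python
def masker_level(l_start, initial_snr=5):
    """Masker level implied by the options above: Lstart - InitialSNR."""
    # Documented range for InitialSNR is -15 to 10 dB.
    if not -15 <= initial_snr <= 10:
        raise ValueError("InitialSNR must be between -15 and 10 dB")
    return l_start - initial_snr


print(masker_level(70, 0))  # protocol example: Lstart = 70, InitialSNR = 0
print(masker_level(70))     # same Lstart with the documented default InitialSNR of 5
```

With the protocol example values, the masker starts at the same level as the target. With the default InitialSNR it would start 5 dB below it.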

Response

For a chaBekesyMLD response area, result.response is a number corresponding to the threshold level in LevelUnits. The result object also contains:

result.Threshold = 5 // Threshold (LevelUnits)

result.Units = "dB HL" // Same as LevelUnits defined in the protocol

result.L = [70, 68, ...] // Array of numbers indicating the levels (in LevelUnits) presented during the exam

result.MaximumExcursion = 14 // Maximum difference (dB) between consecutive user responses that occurred during ReversalKeep period

result.RetSPL = 10 // Reference Equivalent Threshold Sound Pressure Level (RetSPL) at the test frequency

result.Slope = -0.061290324 // Slope of L in dB per presentation over the ReversalKeep period

Note that the Common Audiometry Responses will also be provided.

Schema

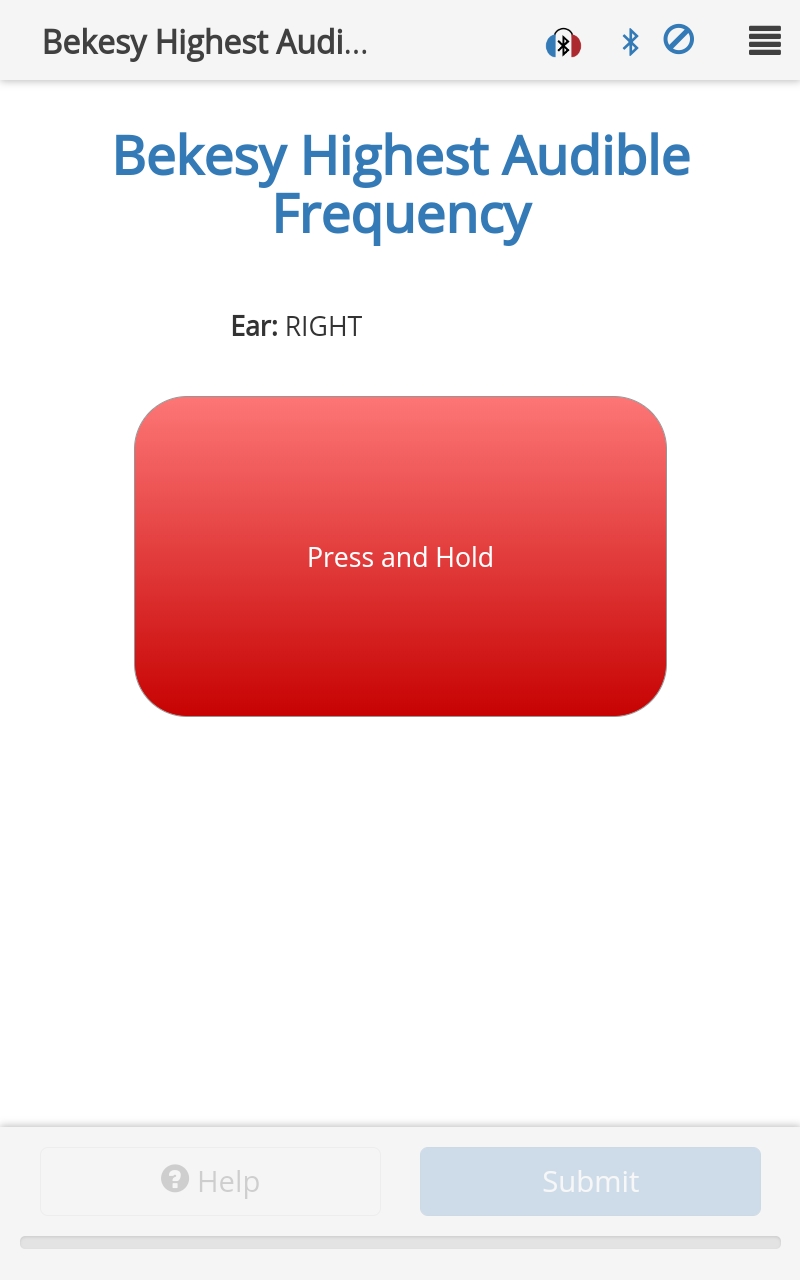

BHAFT Response Area

This response area presents a Bekesy Highest Audible Frequency (BHAFT) exam.

Protocol Example

{
  "id": "BHAFT",
  "title": "Bekesy Highest Audible Frequency Exam",
  "questionMainText": "Bekesy Highest Audible Frequency",
  "instructionText": "Press and hold the button only when you hear the tones.",
  "responseArea": {
    "type": "chaBHAFT",
    "examProperties": {
      "OutputChannel": "HPR0",
      "UseSoftwareButton": true,
      "PresentationMax": 50,
      "Fstart": 8000
    }
  }
}

Options

Audiometry Page Properties may be defined on the PAGE, not within the responseArea.

- examProperties:
  - Type: object
  - Description: May contain any of the properties:
    - ToneRepetitionInterval:
      - Type: integer
      - Description: Interval at which tones are presented, in ms. Overrides default inherited from audiometryProperties properties. (Default = 700, Maximum = 2000, Minimum = 450)
    - L0:
      - Type: number
      - Description: Nominal test level in dB SPL. Allowable range for test level should be from the minimum to the maximum of the levels defined in the calibration table. (Default = 80)
    - ReversalDiscard:
      - Type: integer
      - Description: Number of reversals to discard. (Default = 2, Maximum = 10, Minimum = 0)
    - ReversalKeep:
      - Type: integer
      - Description: Number of reversals to keep. (Default = 6, Maximum = 10, Minimum = 2, must be a multiple of 2)
    - IncrementStartMultiplierFrequency:
      - Type: number
      - Description: Frequency increment until ReversalDiscard: multiply this by IncrementNominalFrequency. (Default = 2, Maximum = 10, Minimum = 1)
    - IncrementNominalFrequency:
      - Type: number
      - Description: Frequency increment after first reversal, in octaves. (Default = 0.08333, Maximum = 1, Minimum = 0.01)
    - IncrementStartMultiplierLevel:
      - Type: number
      - Description: Level increment until ReversalDiscard: multiply this by IncrementNominalLevel. (Default = 2, Maximum = 10, Minimum = 1)
    - IncrementNominalLevel:
      - Type: number
      - Description: Level increment after first reversal, in dB. (Default = 4, Maximum = 10, Minimum = 0.5)
    - MinimumOutputLevel:
      - Type: number
      - Description: Minimum level that could be presented during exam, in dB SPL (allowable test levels are bounded by the minimum and maximum output levels defined in the calibration).
- exportToCSV:
  - Type: boolean
  - Description: If true, export the result to CSV upon submitting exam results. (Default = false)

Response

For a chaBHAFT response area, result.response is a number corresponding to the threshold level in LevelUnits. The result object also contains:

result.Threshold = 65 // Threshold level (dB SPL)

result.ThresholdFrequency = 10275.318 // Threshold frequency (Hz)

result.F = [8000, 8979.696, ...] // Array of numbers indicating the frequencies presented

result.L = [65, 65, ...] // Array of numbers indicating the levels presented

Note that the Common Audiometry Responses will also be provided.
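The frequency increments are given in octaves, so a step multiplies the frequency by a power of two rather than adding a fixed number of hertz. The Python sketch below assumes that the initial step is IncrementStartMultiplierFrequency times IncrementNominalFrequency, applied multiplicatively. That assumption is consistent with the first two values of the F array in the response example (8000 Hz followed by about 8979.7 Hz), but it is not taken from the firmware. The function name `next_frequency` is hypothetical.

```python
def next_frequency(frequency_hz, increment_octaves, direction=1):
    """Move a frequency up (direction=1) or down (direction=-1) by a step in octaves."""
    return frequency_hz * 2 ** (direction * increment_octaves)


nominal = 0.08333      # IncrementNominalFrequency default (octaves, about 1/12)
start_multiplier = 2   # IncrementStartMultiplierFrequency default
initial_step = start_multiplier * nominal  # about 1/6 octave before ReversalDiscard

# Starting from Fstart = 8000 Hz, one initial step up lands near the
# second entry of the example F array.
print(next_frequency(8000, initial_step))
```

Stepping up and then down by the same number of octaves returns to the starting frequency, which is the practical reason for working in octaves.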

Schema

CRM Response Area

This response area is deprecated as of TabSINT version 4.4.0.

Run a WAHTS CRM test. This test assesses the subject's ability to hear a specific talker in a two-talker recording.

Note that the use of the CRM exam is restricted. Please contact tabsint@creare.com if you are interested in using this exam.

Protocol Example

{
  "id": "CRM_exam",
  "title": "CRM Response Area",
  "questionMainText": "CRM Exam",
  "questionSubText": "Your call sign is Baron",
  "responseArea": {
    "type": "chaCRM",
    "autoBegin": true,
    "verticalSpacing": 25,
    "examProperties": {
      "Level": 75,
      "ConditionPresentations": [1,3,2,3,3,3]
    }
  }
}

Options

- skip:
  - Type: boolean
  - Description: If true, allows user to skip the response area. (Default = false)
- autoSubmit:
  - Type: boolean
  - Description: If true, go straight to next page once this page is complete. (Default = false)
- autoBegin:
  - Type: boolean
  - Description: If true, go straight into exam, without having to press the 'Begin' button. (Default = false)
- feedBack:
  - Type: boolean
  - Description: If true, show the correct result after the subject responds or after the maximum time to wait for the user response is reached. (Default = true)
- examInstructions:
  - Type: string
  - Description: Replaces the top-level instruction text on the WAHTS exam pages (each page after starting page).
- verticalSpacing:
  - Type: integer
  - Description: Vertical spacing between buttons, given in [px]. (Default = 30)
- horizontalSpacing:
  - Type: integer
  - Description: Horizontal spacing between buttons, given in [px]. (Default = 10)
- measureBackground:
  - Type: string
  - Description: Method to use to measure background noise after an audiometry exam. Can be ThirdOctaveBands.
- examProperties:
  - Type: object
  - Description: Object containing the following options.
    - ConditionPresentations:
      - Type: array
      - Description: A tuple of the condition and the number of presentations of that condition to present, interleaved, for each condition to present (i.e. [condition, N presentations]). (Default = [1, 5])
        - Condition 1: loud and soft speakers
        - Condition 2: male and female speakers
        - Condition 3: spatial separation between speakers
    - Level:
      - Type: number
      - Description: Level at which to play the presentations (dB SPL). (Default = 80, Maximum = 115, Minimum = 0)
    - UseMcl:
      - Type: boolean
      - Description: If true, the subject may modify the sound level using the joystick. The Level argument is ignored unless the MCL has not yet been set, in which case it is used as the initial level. (Default = false)
    - MaxResponseTime:
      - Type: number
      - Description: Maximum time, in seconds, to wait for a subject response. (Default = 8)

Response

The chaCRM creates a result object for each presentation in ConditionPresentations.

For each presentation, the result.response is the string id of the selected button (e.g. "Blue 1"). Each result object contains Common Audiometry Responses as well as:

result.conditionCode = 2 // Condition of the presentation

result.correctColor = "Red" // String indicating the correct color for this presentation

result.correctNumber = 1 // Integer indicating the correct number for this presentation

result.wavFileName = "0023111821524600211_53.wav" // String name of the wav file for this presentation

result.responseTime = 5.935177 // Time (sec) between when the presentation ended and when the subject responded

result.correct = true // True if the subject responded correctly

There is a final result object that contains the percent correct over all of the presentations.

result.correctPercent = [1,1,2,1,3,1,1] // An array of size 2N + 1, where N is the number of conditions presented. The array contains condition codes and fraction correct for that condition, interleaved. The last value is the overall fraction correct.
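Both ConditionPresentations and correctPercent use an interleaved layout, which is easier to work with once split into pairs. The Python sketch below shows one way to unpack them. The helper names `pairs` and `parse_correct_percent` are hypothetical and not part of TabSINT.

```python
def pairs(interleaved):
    """Split [a1, b1, a2, b2, ...] into [(a1, b1), (a2, b2), ...]."""
    return list(zip(interleaved[0::2], interleaved[1::2]))


def parse_correct_percent(correct_percent):
    """Return ({condition code: fraction correct}, overall fraction correct)."""
    # The last value is the overall fraction; the rest are interleaved pairs.
    *per_condition, overall = correct_percent
    return dict(pairs(per_condition)), overall


# ConditionPresentations from the protocol example: three presentations each
# of conditions 1, 2 and 3.
print(pairs([1, 3, 2, 3, 3, 3]))

# correctPercent from the response example: all conditions fully correct.
print(parse_correct_percent([1, 1, 2, 1, 3, 1, 1]))
```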

Schema

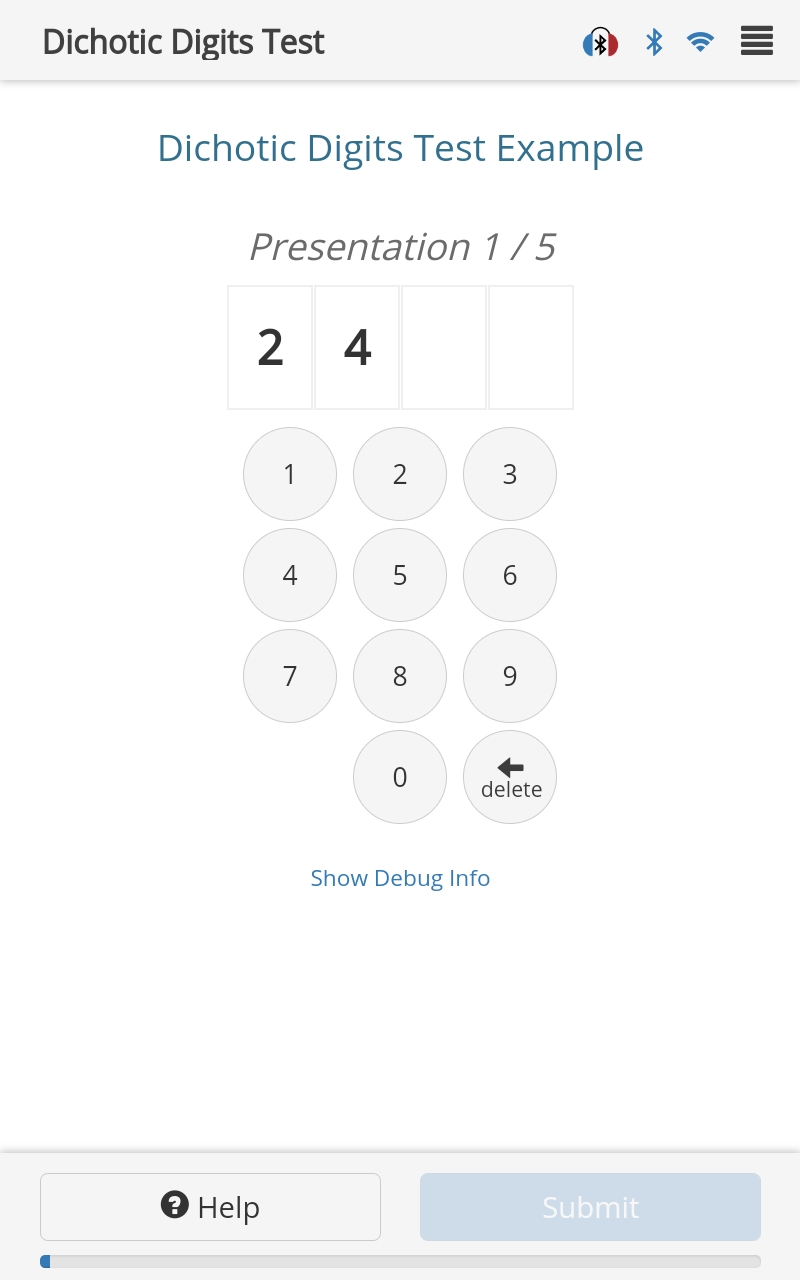

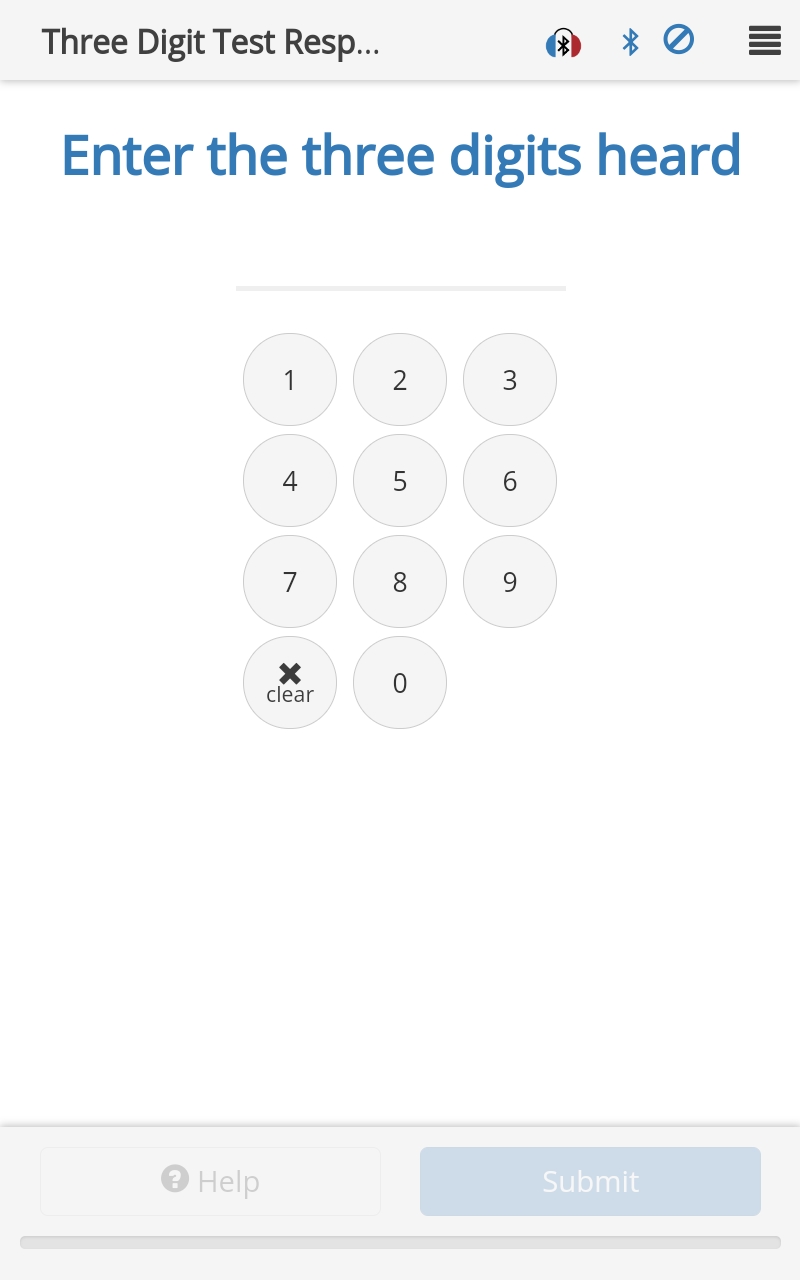

Dichotic Digits Response Area

This response area prepares a Dichotic Digits Test. The exam presents two digits in each ear and the subject repeats the digits they heard. This test measures the ability of a person to identify digit pairs presented dichotically.

Note that the use of the Dichotic Digits exam is restricted. Please contact tabsint@creare.com if you are interested in using this exam.

Protocol Example

{
  "id": "dichoticDigits",
  "title": "Dichotic Digits Test",
  "questionMainText": "Dichotic Digits Test Example",
  "helpText": "Follow instructions",
  "instructionText": "",
  "responseArea": {
    "type": "chaDichoticDigits",
    "examInstructions": "Select the digits heard",
    "examProperties": {
      "NumberOfPresentations": 5,
      "Language": "english",
      "Level": 85
    }
  }
}

Options

- autoBegin:
  - Type: boolean
  - Description: Go straight into exam, without having to press the 'Begin' button. (Default = false)
- keypadDelay:
  - Type: number
  - Description: Number of milliseconds to wait before activating the keypad. (Default = 10)
- feedback:
  - Type: boolean
  - Description: Shows the user which digits were correct after each set of digits is entered. (Default = true)
- feedbackDelay:
  - Type: number
  - Description: Number of milliseconds to show the digits after the presentation before clearing the keypad. This field can be used even when feedback is set to false. (Default = 1000)
- examInstructions:
  - Type: string
  - Description: Replaces the top-level instruction text on the WAHTS exam pages (each page after starting page).
- examProperties:
  - Type: object
  - Description: Object containing the following options.
    - NumberOfPresentations:
      - Type: number
      - Description: Number of presentations in an exam. (Default = 20)
    - Level:
      - Type: number
      - Description: Level of presentations, in dB SPL. (Default = 50)
    - Language:
      - Type: string
      - Description: Language to use for the test media. Can be spanish or english. (Default = spanish)

Response

A Dichotic Digits exam generates a result object for each Dichotic Digits presentation. Within each object, result.response is an array containing the selected numbers. Each result object also contains:

result.presentationId = "dichoticDigits" // Same as the protocol page id

result.PresentationCount = 2 // Number of Dichotic Digits presentations

result.PresentedDigits = [9, 5, 8, 3] // Array of digits presented (length of 4)

result.PresentedFile = "C:DD/DD9583.WAV" // Path to wav file presentation

result.PresentationScore = 75 // Percentage of digits correctly identified in the current presentation

result.response = [5, 9, 1, 3] // Array of digits selected (length of 4)

There is a final result object summarizing the test results:

result.response = "Exam Results" // String indicating that this is the summary result for the Dichotic Digits exam

result.presentationId = "dichoticDigits" // Protocol page id

result.ScoreTotal = 80 // Calculated score percentage for all digits presented. ScoreTotal = (total number of digits identified /(NumberOfPresentations x 4)) x 100. Only valid in state DONE.

result.ScoreLeft = 70 // Calculated score percentage for digits presented to the LEFT ear only. ScoreLeft = (total number of digits that were presented to the LEFT ear and were correctly identified / (NumberOfPresentations x 2)) x 100. Only valid in state DONE.

result.ScoreRight = 90 // Calculated score percentage for digits presented to the RIGHT ear only. ScoreRight = (total number of digits that were presented to the RIGHT ear and were correctly identified / (NumberOfPresentations x 2)) x 100. Only valid in state DONE.

result.resultsFromCha.State = 2 // "Done" state
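The score formulas above can be reproduced with a short Python sketch. The order-independent matching in `presentation_score` is an assumption: it is consistent with the example presentation (three of the four presented digits appear in the response, giving 75), but the exam's actual matching rule is not stated here. Both function names are hypothetical.

```python
def presentation_score(presented, response):
    """Percentage of presented digits that appear in the response (order ignored)."""
    remaining = list(response)
    correct = 0
    for digit in presented:
        if digit in remaining:
            # Consume the matched digit so it cannot be counted twice.
            remaining.remove(digit)
            correct += 1
    return 100 * correct / len(presented)


def score_total(digits_identified, number_of_presentations):
    """ScoreTotal = digits identified / (NumberOfPresentations x 4) x 100."""
    return 100 * digits_identified / (number_of_presentations * 4)


print(presentation_score([9, 5, 8, 3], [5, 9, 1, 3]))  # 75.0, matching PresentationScore
print(score_total(16, 5))                              # 80.0 for 16 of 20 digits
```

ScoreLeft and ScoreRight follow the same pattern with a denominator of NumberOfPresentations x 2, since two digits are presented to each ear.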

Schema

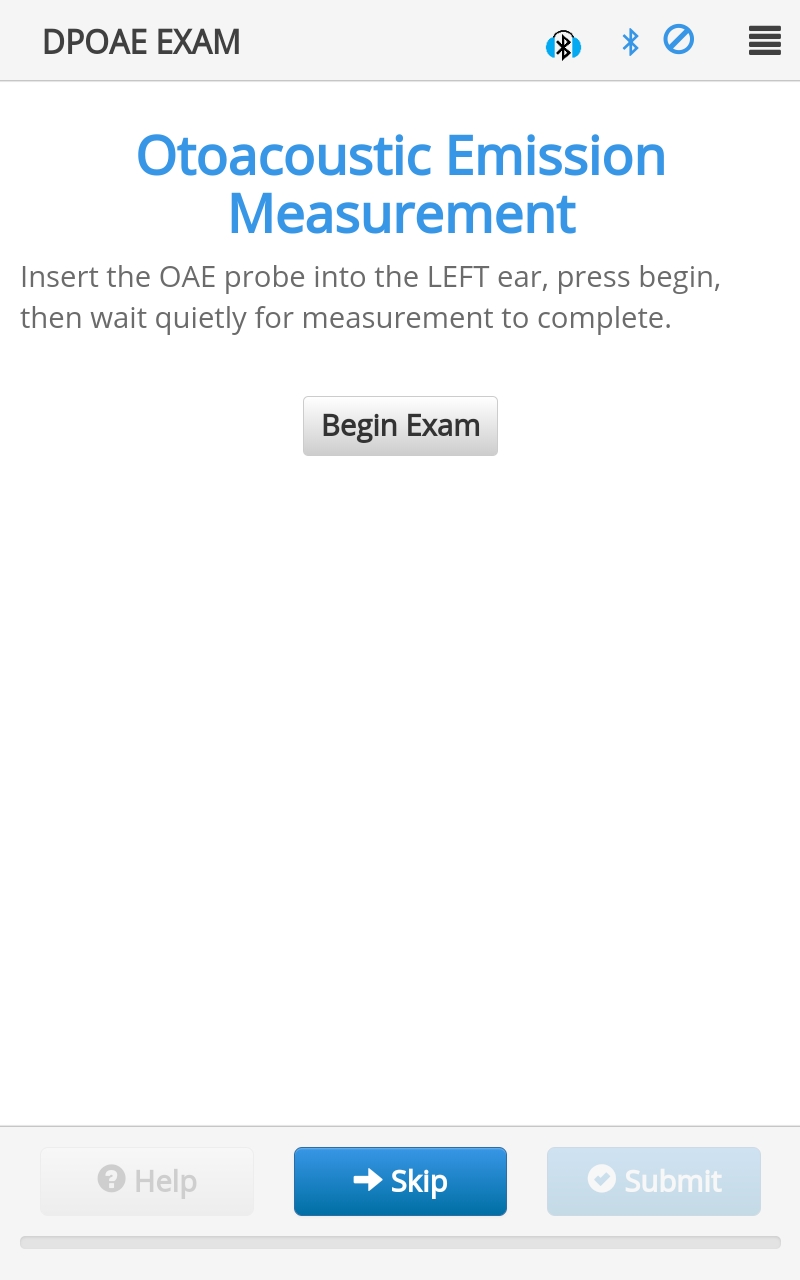

DPOAE Response Area

This response area prepares for a Distortion Product Otoacoustic Emissions examination (DPOAE). The WAHTS emits tones at two frequencies and listens for a response.

Protocol Example

{
  "id": "DPOAE_LEFT",
  "title": "DPOAE EXAM",
  "questionMainText": "Otoacoustic Emission Measurement",
  "instructionText": "Insert the OAE probe into the LEFT ear, press begin, then wait quietly for measurement to complete.",
  "responseArea": {
    "type": "chaDPOAE",
    "skip": true,
    "examProperties": {
      "BlockSize": 8192,
      "F1": 4000,
      "F2": 6000,
      "L1": 65,
      "L2": 55,
      "InputChannel": 3,
      "DisableSpectrum": true,
      "NoiseRejection": true
    }
  }
}

Options

- skip:
  - Type: boolean
  - Description: If true, allows user to skip the exam. (Default = false)
- autoSubmit:
  - Type: boolean
  - Description: If true, go straight to the next page once this page is complete. (Default = false)
- autoBegin:
  - Type: boolean
  - Description: If true, go straight into the exam, without having to press the 'Begin' button. (Default = false)
- feedBack:
  - Type: boolean
  - Description: Shows the correct result after the subject responds or after the maximum time to wait for the user response is reached. (Default = true)
- examInstructions:
  - Type: string
  - Description: Replaces the top-level instruction text on the WAHTS exam pages (each page after starting page).
- measureBackground:
  - Type: string
  - Description: Method to use to measure background noise after an audiometry exam. The one available option is ThirdOctaveBands.
- plotProperties:
  - Type: object
  - Description: Object containing the following option.
    - displayDPOAE:
      - Type: array
      - Description: An array of strings indicating results to display. Option is DPOAE.
- examProperties:
  - Type: object
  - Description: Object containing the following options.
    - BlockSize:
      - Type: integer
      - Description: The number of samples in a block used for the FFT (must be 1024 for use with the OAE Screener). (Default = 8192)
    - F1:
      - Type: number
      - Description: Frequency of F1 (Hz). (Default = 833.33)
    - F2:
      - Type: number
      - Description: Frequency of F2 (Hz). (Default = 1000)
    - L1:
      - Type: number
      - Description: Level of F1 (dB SPL). (Default = 65)
    - L2:
      - Type: number
      - Description: Level of F2 (dB SPL). (Default = 55)
    - MinTestAverages:
      - Type: number
      - Description: Minimum number of blocks that are averaged into the result before the test ends via the MinDpNoiseFloorThresh criterion. (Default = 60)
    - MaxTestAverages:
      - Type: number
      - Description: Maximum number of blocks to consider for averaging. (Default = 120, Minimum = 0, Maximum = 18750)
    - InputChannel:
      - Type: integer
      - Description: Input channel specifier. Defaults to 100, but 3 should be used for OAES devices.
    - DisableSpectrum:
      - Type: boolean
      - Description: If true, disable FFT spectrum results. Defaults to true (FFT disabled).
    - MinDpNoiseFloorThresh:
      - Type: number
      - Description: When the low DP exceeds the noise floor in the surrounding +/- NoiseHalfBandwidth Hz bins by this amount, the test will conclude (provided the MinTestAverages have been met). (Default = 10)
    - NoiseHalfBandwidth:
      - Type: number
      - Description: Bandwidth over which to calculate the noise floor in Hz. (Default = 30)
    - NoiseRejection:
      - Type: boolean
      - Description: If true, the noise rejection algorithm is applied to discard noisy data blocks. If false, all data is accepted. (Default = false)
    - TransientDiscard:
      - Type: number
      - Description: Initial period of data discarded at start of tone measured in ms. (Default = 21.3)

Response

The result.response from a chaDPOAE response area contains:

DpLow.Frequency = # // The actual frequency (Hz) of the measurement.

DpLow.Amplitude = # // The amplitude (dB SPL) measured at the microphone at the Frequency.

DpLow.Phase = # // The phase (rad) measured at the microphone at the Frequency.

DpLow.NoiseFloor = # // The amplitude (dB SPL) of the noise floor in the ±3 FFT frequencies [2] around the measurement frequency. Only calculated for Distortion Products; others shall be zero.

DpHigh.Frequency = # // The actual frequency (Hz) of the measurement.

DpHigh.Amplitude = # // The amplitude (dB SPL) measured at the microphone at the Frequency.

DpHigh.Phase = # // The phase (rad) measured at the microphone at the Frequency.

DpHigh.NoiseFloor = # // The amplitude (dB SPL) of the noise floor in the ±3 FFT frequencies [2] around the measurement frequency. Only calculated for Distortion Products; others shall be zero.

F1.Frequency = # // The actual frequency (Hz) of the measurement.

F1.Amplitude = # // The amplitude (dB SPL) measured at the microphone at the Frequency.

F1.Phase = # // The phase (rad) measured at the microphone at the Frequency.

F2.Frequency = # // The actual frequency (Hz) of the measurement.

F2.Amplitude = # // The amplitude (dB SPL) measured at the microphone at the Frequency.

F2.Phase = # // The phase (rad) measured at the microphone at the Frequency.

TestAverages = # // The actual number of blocks averaged into the result (important data for noise rejection.)

examProperties.BlockSize = # // The number of samples used for the FFT.

examProperties.F1 = # // The actual frequency (Hz) used as F1.

examProperties.F2 = # // The actual frequency (Hz) used as F2.

examProperties.L1 = # // The actual amplitude (dB SPL) used as L1.

examProperties.L2 = # // The actual amplitude (dB SPL) used as L2.

channel = "string" // The input channel specifier.
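For orientation, the two cubic distortion products conventionally measured in DPOAE testing lie at 2·F1 − F2 and 2·F2 − F1. The Python sketch below computes them for a pair of primaries. Mapping these two values to the DpLow and DpHigh fields is an assumption based on their names, and the function name `distortion_products` is hypothetical.

```python
def distortion_products(f1, f2):
    """Return the cubic distortion-product frequencies (2*f1 - f2, 2*f2 - f1) in Hz."""
    if not f1 < f2:
        raise ValueError("expected F1 < F2")
    return 2 * f1 - f2, 2 * f2 - f1


# F1 and F2 from the protocol example.
low, high = distortion_products(4000, 6000)
print(low, high)  # 2000 8000
```

The reported DpLow.Frequency and DpHigh.Frequency are described as the actual frequencies of the measurement, so they may differ slightly from these nominal values.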

Schema

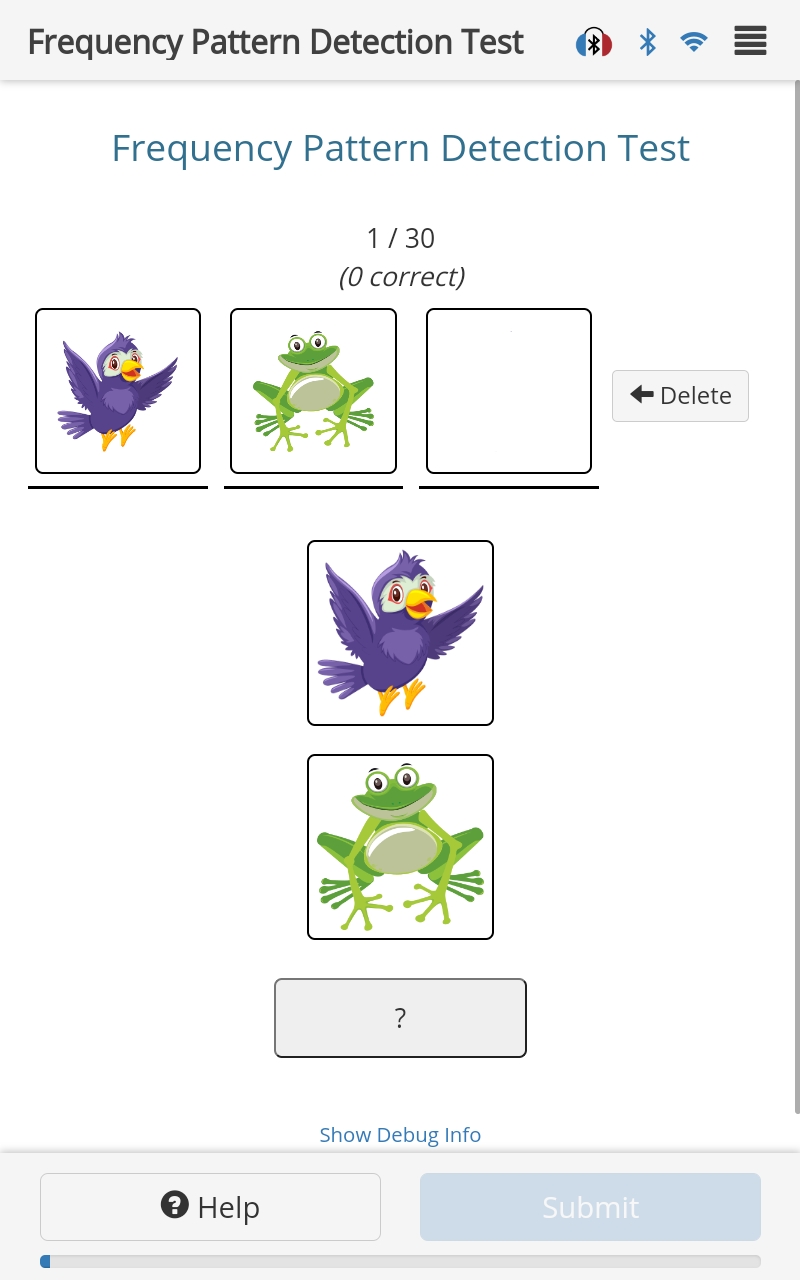

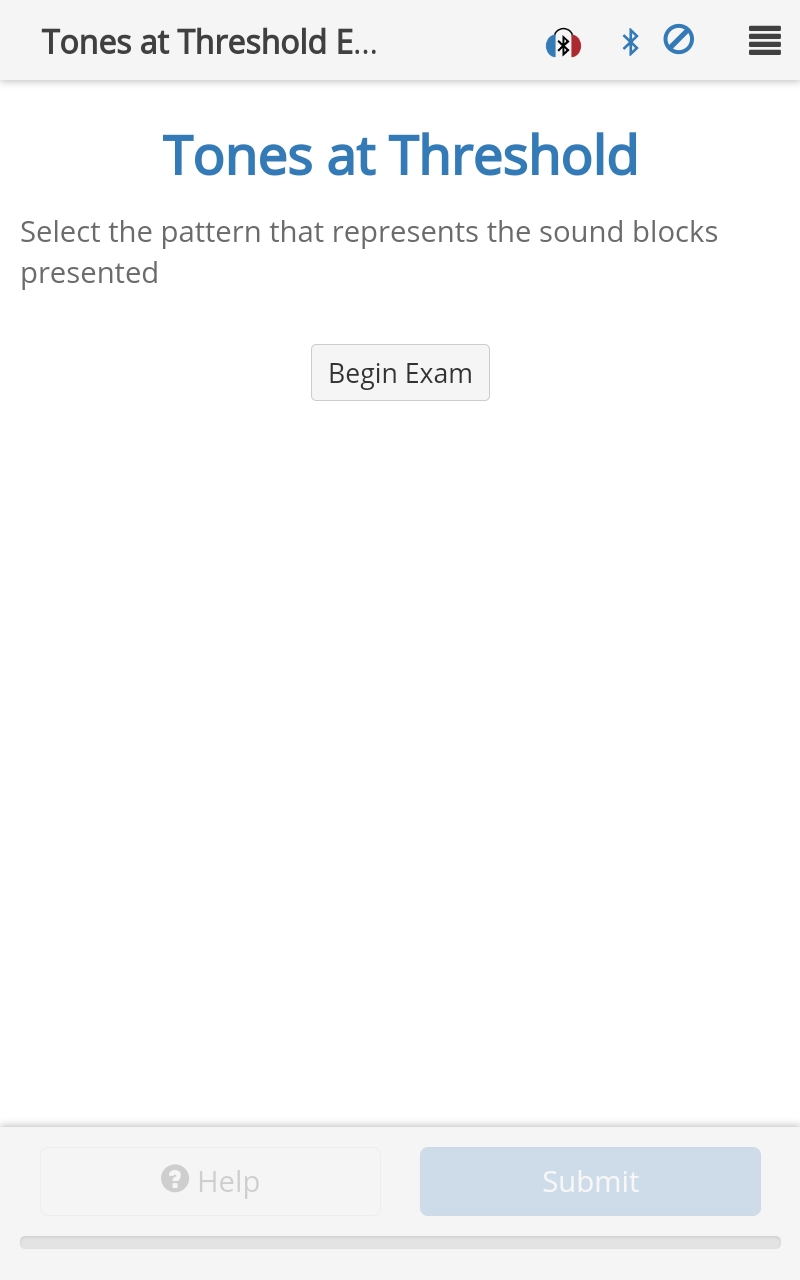

Frequency Pattern Detection Response Area

Use this response area to administer a Frequency Pattern Detection Test, a test that measures the ability of a person to detect the frequency pattern of the presentation.

Protocol Example

{
  "id": "FrequencyPattern",
  "title": "Frequency Pattern Detection Test",
  "questionMainText": "Frequency Pattern Detection Test",
  "instructionText": "Enter the pattern as heard",
  "responseArea": {
    "type": "chaFrequencyPattern"
  }
}

Options

- autoBegin:
  - Type: boolean
  - Description: If true, go straight into the exam, without having to press the 'Begin' button. (Default = false)
- feedback:
  - Type: boolean
  - Description: If true, show the user whether they answered each frequency correctly. (Default = true)
- feedbackDelay:
  - Type: number
  - Description: Number of milliseconds to show the feedback after the presentation before starting the next presentation. (Default = 1000)
- examProperties:
  - Type: object
  - Description: Object containing the following options.
    - Channel:
      - Type: number
      - Description: Channel to be used, where 0 = Left, 1 = Right, 2 = Both. (Default = 0)
    - NumberOfPresentations:
      - Type: number
      - Description: Number of presentations. (Default = 30, Minimum = 0, Maximum = 40)
    - Level:
      - Type: number
      - Description: Level of tones, set by calibration, in dB SPL. (Default = 75, Minimum = 0, Maximum = 100)

Response

A Frequency Pattern Detection exam generates a result object for each Frequency Pattern presentation. Within each object, result.response is a number representing the frequencies identified, where a high frequency is denoted by 2, a low frequency is denoted by 1, and unsure is denoted by 0. For example, HLL is recorded as 211. Each result object also contains:

result.presentationId = "FrequencyPattern" // Same as the protocol page id

result.state = 1 // 1 = In Progress, 2 = Done

result.presentationCount = 15 // Number of presentations

result.presentedPattern = 212 // Frequency pattern that was presented

result.correct = 0 // Returns true if Response matches PresentedPattern

result.response = 121 // Frequency pattern selected/entered

There is a final result object summarizing the test results:

result.response = "Exam Results" // String indicating that this is the summary result for the Frequency Pattern exam

result.presentationId = "FrequencyPattern_Results" // Protocol page id with "_Results" appended

result.presentationCount = 30 // Number of presentations completed

result.numberOfReversals = 3 // Total number of reversals (where the pattern selected was the exact inverse of what was presented, for example LHH instead of HLL). Only valid in state DONE

result.score = 83.3 // Calculated score percentage. Only valid in state DONE

result.resultsFromCha.State = 2 // "Done" state
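The numeric pattern encoding described above can be unpacked in post-processing. The following Python sketch is illustrative only and is not part of TabSINT: the helper names are hypothetical, and it assumes three-tone patterns (as in the examples 211, 212 and 121) and result objects already loaded as dictionaries.

```python
# Digit codes taken from the response description: 2 = high, 1 = low, 0 = unsure.
DIGIT_TO_LABEL = {"2": "H", "1": "L", "0": "?"}


def decode_pattern(value, length=3):
    """Turn a numeric pattern such as 211 into a label string such as 'HLL'.

    The pattern length of 3 is an assumption based on the documented examples.
    """
    digits = str(int(value)).zfill(length)  # zfill keeps a leading 'unsure' (0) digit
    return "".join(DIGIT_TO_LABEL[d] for d in digits)


def is_reversal(presented, response):
    """True when every tone is swapped (H<->L), e.g. LHH entered for HLL."""
    swap = {"H": "L", "L": "H"}
    p, r = decode_pattern(presented), decode_pattern(response)
    return all(swap.get(a) == b for a, b in zip(p, r))


def score_percent(results):
    """Percentage of presentations whose response equals the presented pattern."""
    if not results:
        return 0.0
    correct = sum(1 for res in results if res["response"] == res["presentedPattern"])
    return 100.0 * correct / len(results)


print(decode_pattern(211))    # HLL
print(is_reversal(212, 121))  # True: HLH was answered as LHL
```

With this reading, the per-presentation example above (presentedPattern = 212, response = 121) is both incorrect and a reversal.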

Schema

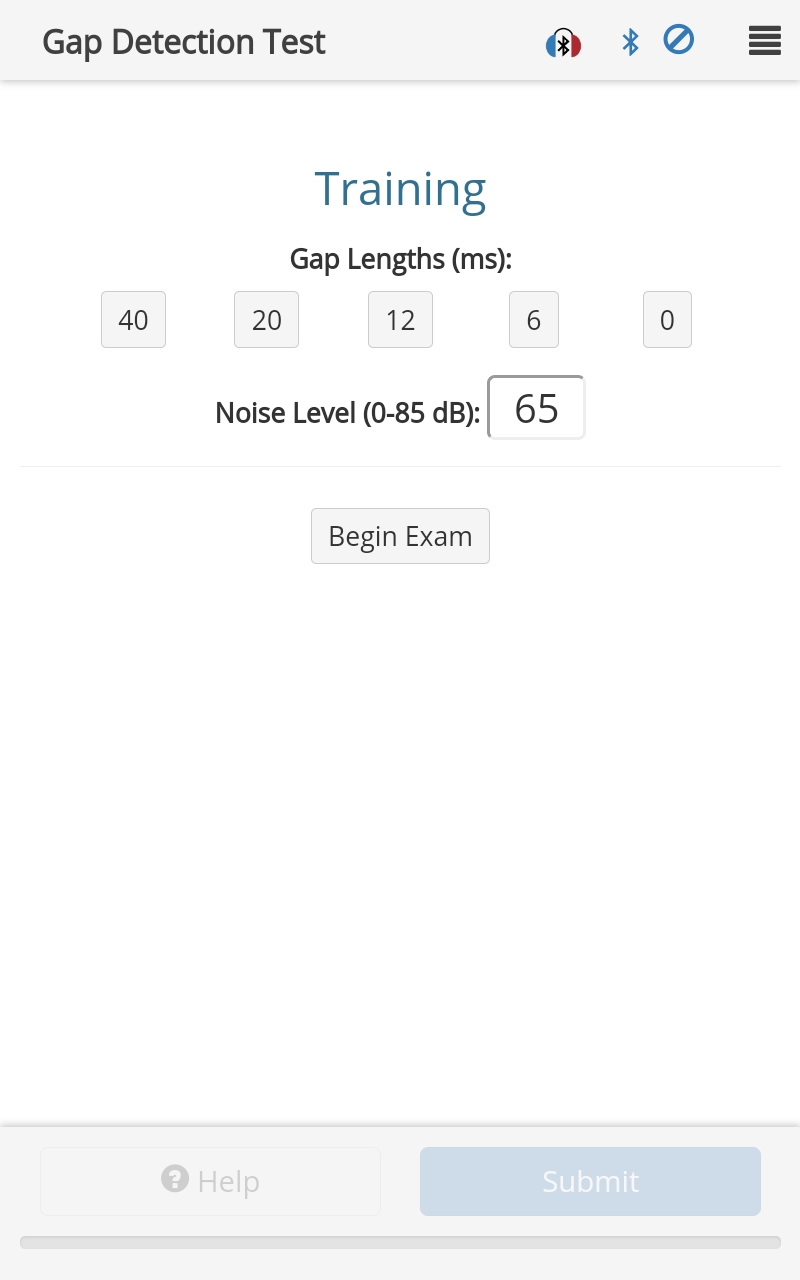

GAP Response Area

Use this response area to administer a Gap Detection Test, a test that measures the subject's ability to detect silent gaps in white noise.

Protocol Example

{

"id": "Demonstration",

"title": "Gap Detection Test",

"responseArea": {

"type":"chaGAP",

"training": true,

"examProperties": {

"TimePres": 3000,

"LNoise": 50,

"NPresMax": 40

}

}

}

Options

skip:

- Type: boolean
- Description: If true, allow the user to skip the response area. (Default = false)

autoSubmit:

- Type: boolean
- Description: If true, go straight to next page once this page is complete. (Default = false)

autoBegin:

- Type: boolean
- Description: If true, go straight into the exam, without having to press the 'Begin' button. (Default = false)

feedBack:

- Type: boolean
- Description: If true, show the correct result after the subject responds or after the maximum time to wait for the user response is reached. (Default = false)

feedbackDelay:

- Type: number
- Description: Number of milliseconds to show the digits after the presentation before clearing the keypad. This field can be used even when feedback is set to false. (Default = 1000)

training:

- Type: boolean
- Description: If true, present the training exam. (Default = false)

examInstructions:

- Type: string
- Description: Replaces the top-level instruction text on the WAHTS exam pages (each page after starting page).

measureBackground:

- Type: string
- Description: Method to use to measure background noise after an audiometry exam. Can be ThirdOctaveBands.

examProperties:

- Type: object
- Description: Object containing the following options.

Channel:

- Type: number
- Description: Channel to be used, where 0 = Left, 1 = Right, 2 = Both. (Default = 0)

TimePres:

- Type: number
- Description: Total length of each noise presentation in ms. (Default = 4000, Minimum = 0, Maximum = 40000)

LNoise:

- Type: number
- Description: Presentation level in dBA. (Default = 65, Minimum = 0, Maximum = 85)

AllowableGapLengths:

- Type: array
- Description: An array of numbers representing a list of allowable gap lengths, in ms, up to 30 items long. The minimum number of items in this array is 1 and the values must be between 0 and 100 (inclusive). (Default = [70,60,50,45,40,35,30,25,20,16,13,10,7,4,2,1])

TimeLead:

- Type: number
- Description: Length of leading delay before gap can be inserted, in ms. (Default = 1000, Minimum = 0, Maximum = 2000)

TimeTrail:

- Type: number
- Description: Length of trailing delay needed after gap, in ms. (Default = 1000, Minimum = 0, Maximum = 2000)

TimeWindow:

- Type: number
- Description: Length of window during which response can be accepted, in ms. (Default = 850, Minimum = 0, Maximum = 2000)

TimeNoResp:

- Type: number
- Description: Delay between beginning of gap and beginning of response window, in ms. (Default = 100, Minimum = 0, Maximum = 200)

TimePause:

- Type: number
- Description: Elapsed time between presentations, in ms. (Default = 1000, Minimum = 0, Maximum = 5000)

GapLengthStartIndex:

- Type: number
- Description: Index into AllowableGapLengths for initial gap length value. (Default = 8, Minimum = 0, Maximum = 29)

NReversalsCalc:

- Type: number
- Description: Number of reversals to use in computation of threshold. (Default = 8, Minimum = 1, Maximum = 10)

NReversals:

- Type: number
- Description: Number of reversals before test ends. (Default = 10, Minimum = 1, Maximum = 20)

NLowestReversals:

- Type: number
- Description: Number of lowest pairwise reversal averages to track for second threshold computation. (Default = 3, Minimum = 0, Maximum = 10)

NPresMax:

- Type: number
- Description: Maximum number of presentations to use before aborting the exam. (Default = 120, Minimum = 1, Maximum = 200)

NHits:

- Type: number
- Description: Number of consecutive hits (correct answers) necessary before reducing gap length. (Default = 2, Minimum = 1, Maximum = 3)

NMiss:

- Type: number
- Description: Number of consecutive misses (incorrect or no answers) necessary before increasing gap length. (Default = 2, Minimum = 1, Maximum = 3)

NPresCheck:

- Type: number
- Description: Number of consecutive presentations with same gap length allowed. (Default = 5, Minimum = 1, Maximum = 8)

MaxFreq:

- Type: number
- Description: Maximum frequency used to generate the white noise, in Hz. When the calibration is loaded to create filter coefficients, the table is truncated to include only those rows whose frequencies are less than or equal to MaxFreq. (Default = 16000, Minimum = 4000, Maximum = 16000)

UseSoftwareButton:

- Type: boolean
- Description: Uses a software submission instead of the mechanical Button. (Default = false)

SendFullResults:

- Type: number
- Description: When to transmit thresholds and array results. Available choices are: 0 = Always, 1 = Sometimes, 2 = Never. (Default = 0)

SemiAutomaticMode:

- Type: boolean
- Description: If true, pause after each pulse train to wait for a response. If false, proceed in a fully automated fashion. (Default = false)
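The limits on AllowableGapLengths and GapLengthStartIndex listed above can be checked before a protocol is loaded. The Python sketch below is a hypothetical helper, not a TabSINT function. It uses the documented defaults and limits, and additionally assumes that the start index must point at an existing element of the array, which the options imply but do not state.

```python
# Default list copied from the AllowableGapLengths description.
DEFAULT_GAP_LENGTHS = [70, 60, 50, 45, 40, 35, 30, 25, 20, 16, 13, 10, 7, 4, 2, 1]


def check_gap_lengths(exam_properties):
    """Return a list of problems found in a chaGAP examProperties dictionary."""
    lengths = exam_properties.get("AllowableGapLengths", DEFAULT_GAP_LENGTHS)
    start = exam_properties.get("GapLengthStartIndex", 8)
    problems = []
    if not 1 <= len(lengths) <= 30:
        problems.append("AllowableGapLengths must contain 1 to 30 items")
    if any(not 0 <= gap <= 100 for gap in lengths):
        problems.append("gap lengths must be between 0 and 100 ms")
    # Assumption: the start index has to refer to an element that exists.
    if not 0 <= start < len(lengths):
        problems.append("GapLengthStartIndex points outside AllowableGapLengths")
    return problems


# The protocol example above sets no gap options, so the defaults apply.
print(check_gap_lengths({"TimePres": 3000, "LNoise": 50, "NPresMax": 40}))  # []
```

A shortened AllowableGapLengths array usually needs a matching GapLengthStartIndex, because the default index of 8 would fall outside an array with fewer than nine entries.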

Response

The contents of the result object depend on the value of the SendFullResults parameter and the State of the exam. The following result items are always included:

result.State = "SUCCESS" // Either "IN PROGRESS," "SUCCESS" (if the exam ended and thresholds were calculated), or "FAILED" (if the exam terminated abnormally)

result.PresentationCount = 32 // Number of completed presentations

result.HitOrMiss = 1 // Integer of 1 (True) if the gap was successfully detected or 0 (False) if either (a) no response or (b) response outside the response window (for current presentation)

result.CurrentGapStartTime = 1907.3019 // Location of the gap within the noise for current presentation, from the start of the noise (ms)

result.CurrentGapLength = 6.9999995 // Gap length for current presentation (ms) (same as last element in result.GapLengthArray).

result.CurrentTimeResp = 360.22913 // Response time after end of gap (ms) for current presentation (-1 if no response) (same as last element in result.TimeRespArray).

result.PlayPosition = 4000.5413 // Time elapsed since the beginning of the noise presentation (ms)

result.ActualMaxFreq = 48000 // Actual maximum frequency (Hz) used by the calibration routine in generating the FIR filter

If SendFullResults is 0 (or 1 AND the State is not IN PROGRESS), the following items will also be included in the result object:

result.GapThreshold = 5.4999995 // Average gap length (ms) calculated based on last NReversalsCalc reversals (NaN if threshold can't be computed)

result.GapLowestThreshold = 5.4999995 // Average of the shortest NLowestReversals pairwise averages of the NReversals reversals (exclude first reversal if NReversals is odd) (NaN if threshold can't be computed or NLowestReversals is zero)

result.GapLengthArray = [19.999998,19.999998, ...] // Array of gap lengths for each presentation (ms)

result.TimeRespArray = [357.73956,324.5833, ...] // Array of response times (ms) for each presentation (-1 if no response)

result.HitOrMissArray = [true,true, ...] // Boolean array indicating true if the gap was successfully detected, or false if either (a) no response or (b) response outside the response window for each presentation.

result.ReversalUsedForThresholdArray = [false,false, ...] // Boolean array indicating true if the presentation was a reversal used to calculate the threshold or false otherwise
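The full-result arrays can be cross-checked against the reported threshold. The Python sketch below is an interpretation rather than the WAHTS firmware algorithm: it assumes that GapThreshold is the plain mean of the gap lengths at the presentations flagged in ReversalUsedForThresholdArray, and the function name is hypothetical.

```python
import math


def gap_threshold(gap_lengths, used_for_threshold):
    """Mean gap length (ms) over the presentations flagged as threshold reversals.

    Returns NaN when no presentation is flagged, mirroring the documented
    behaviour of result.GapThreshold when no threshold can be computed.
    """
    used = [gap for gap, flag in zip(gap_lengths, used_for_threshold) if flag]
    return sum(used) / len(used) if used else math.nan


# Illustrative arrays only; real values come from result.GapLengthArray
# and result.ReversalUsedForThresholdArray.
print(gap_threshold([20, 10, 7, 4], [False, True, False, True]))  # 7.0
```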

Schema

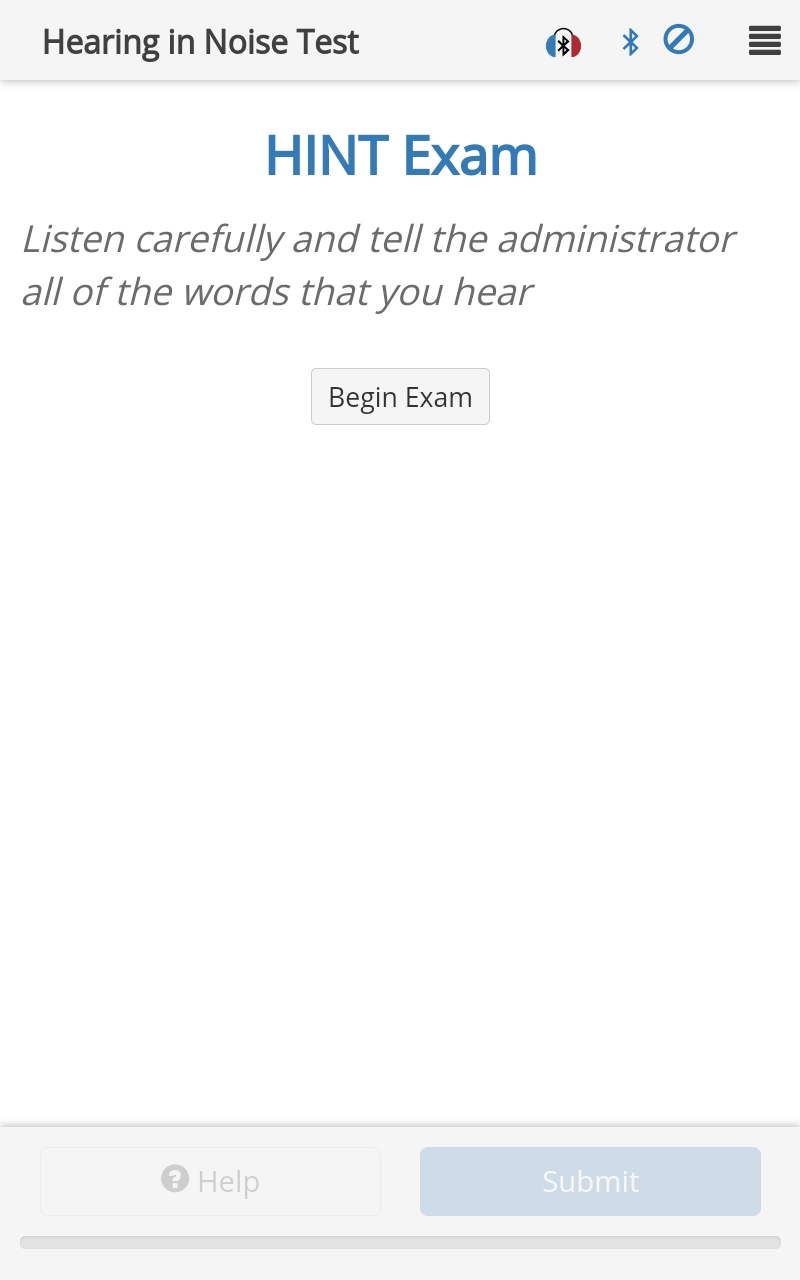

HINT Response Area

Run a Hearing in Noise Test (HINT) exam.

Note that the use of the HINT exam is restricted. Please contact tabsint@creare.com if you are interested in using this exam.

Protocol Example

{

"id": "chaHINT",

"title": "Hearing in Noise Test",

"questionMainText":"HINT Exam",

"questionSubText":"Listen carefully and tell the administrator all of the words that you hear",

"responseArea": {

"type": "chaHINT",

"examProperties": {

"Language": "english",

"ListNumber": 1,

"NumberOfPresentations": 10

}

}

}

Options

skip:

- Type: boolean
- Description: If true, allow the user to skip the response area. (Default = false)

autoSubmit:

- Type: boolean
- Description: If true, go straight to next page once this page is complete. (Default = false)

autoBegin:

- Type: boolean
- Description: If true, go straight into the exam, without having to press the 'Begin' button. (Default = false)

examInstructions:

- Type: string
- Description: Replaces the top-level instruction text on the WAHTS exam pages (each page after starting page).

measureBackground:

- Type: string
- Description: Method to use to measure background noise after an audiometry exam. Can be ThirdOctaveBands.

examProperties:

- Type: object
- Description: Object containing the following options:

Language:

- Type: string
- Description: Language to use for HINT test (can be english, mandarin, military, swahili, laspanish (Latin American Spanish) or portuguese). (Default = english)

IsPractice:

- Type: boolean
- Description: If true, run as a practice exam using the practice lists. (Default = false)

Direction:

- Type: string
- Description: Noise direction, can be front, left, right or quiet. (Default = front)

NoiseLevel:

- Type: number
- Description: Absolute level at which noise is played, in dBA SPL. (Default = 65, Minimum = 0, Maximum = 85)

InitialStepSize:

- Type: number
- Description: Change in SNR for first 4 presentations, in dB. (Default = 4, Minimum = 0, Maximum = 20)

StepSize:

- Type: number
- Description: Change in SNR after each response (after the first 4 presentations), in dB. (Default = 2, Minimum = 0, Maximum = 20)

InitialSNR:

- Type: number
- Description: SNR for the first presentation, in dB. See below for handling of NaN. (Default = NaN, Minimum = -20, Maximum = 20)
- If NaN and:
  - Direction = front, then InitialSNR = 0
  - Direction = left, then InitialSNR = -5
  - Direction = right, then InitialSNR = -5
  - Direction = quiet, then InitialSNR = 20 - NoiseLevel

ListNumber:

- Type: number
- Description: One-based index of the list to use, where 0 selects the list randomly. (Default = 0, Minimum = 0, Maximum = 12)

NumberOfPresentations:

- Type: number
- Description: Number of presentations. (Default = 20, Minimum = 10, Maximum = 150)

DisableRepeatFirstUntilCorrect:

- Type: boolean
- Description: By default, this exam will repeat the first presentation until the subject gets it correct. If this field is true, the exam will not repeat the first presentation until the subject gets it correct. (Default = false)
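The NaN handling of InitialSNR described in the options above amounts to a small lookup. The following Python sketch restates those documented rules. The function name is hypothetical, and the code only mirrors the table; it is not taken from the WAHTS firmware.

```python
import math


def resolve_initial_snr(initial_snr=math.nan, direction="front", noise_level=65):
    """Return the SNR (dB) used for the first HINT presentation.

    Defaults follow the documented options: InitialSNR = NaN,
    Direction = front, NoiseLevel = 65.
    """
    if not math.isnan(initial_snr):
        return initial_snr  # an explicit value is used as given
    if direction == "quiet":
        return 20 - noise_level
    return {"front": 0, "left": -5, "right": -5}[direction]


print(resolve_initial_snr())                   # 0
print(resolve_initial_snr(direction="quiet"))  # -45
```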

Response

A HINT exam generates a result object for each HINT presentation. Within each object, result.response is an array containing the zero-based indices of the selected words. Each result object also contains:

result.presentationId = "chaHINT" // Same as the protocol page id

result.State = 0 // 0 = Playing, 1 = Loading, 2 = Waiting for Result, 3 = Done

result.SentencePath = "C:HINT/LIST1/TIS019.WAV" // String indicating the file name of the current presentation

result.CurrentSentence = "(A/The) mailman brought (a/the) letter." // String representation of the current presentation

result.ListLength = 10 // Number of presentations

result.CurrentSentenceIndex = 4 // Zero-based index of the current presentation.

result.sSRT = -3.2 // Calculated sentence speech reception threshold (valid only when State = DONE)

result.sSRTstd = 0 // Standard deviation of the SNRs used to calculate sSRT (valid only when State = DONE)

result.CurrentSNR = -3.2 // SNR (dB) of the current sentence

result.selectedWords = ["(A/The)","mailman"] // Array of strings indicating the words selected from the presentation

result.numberCorrect = 2 // Number of words identified correctly

result.wordCount = 5 // Total number of words in the presentation

result.responseToCha = 3 // Bit field representation of the words which are correct in the sentence. If the word is correct, the bit is 1; otherwise it is zero. The least significant bit corresponds to the first word.
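The responseToCha bit field can be expanded into one flag per word. This is a minimal Python sketch with a hypothetical function name. It relies only on the description above: the least significant bit corresponds to the first word, and a set bit means the word was correct.

```python
def decode_word_bits(response_to_cha, word_count):
    """Return a list of booleans, one per word, first word first."""
    return [bool((response_to_cha >> i) & 1) for i in range(word_count)]


# Values from the example result: responseToCha = 3, wordCount = 5.
flags = decode_word_bits(3, 5)
print(flags)       # [True, True, False, False, False]
print(sum(flags))  # 2, which matches result.numberCorrect
```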

There is a final result object summarizing the test results:

result.response = "Exam Results" // String indicating that this is the summary result for the HINT exam

result.presentationId = "chaHINT_Results" // Protocol page id with "_Results" appended

result.presentationCount = 10 // Number of presentations completed

result.correctPresentationCount = 7 // Number of presentations for which the user correctly identified all of the words

result.resultsFromCha.State = 2 // "Done" state

result.resultsFromCha.SentencePath = "C:HINT/LIST1/TIS010.WAV" // String indicating the file name of the last presentation

result.resultsFromCha.CurrentSentence = "(A/The) car (is/was) going too fast." // String representation of the last presentation

result.resultsFromCha.ListLength = 10 // Number of presentations

result.resultsFromCha.CurrentSentenceIndex = 10 // One greater than the zero-based index of the last presentation (equal to ListLength)

result.resultsFromCha.sSRT = -8.971428 // Average of the SNRs of presentations 5 through (NumberOfPresentations + 1) where the SNR of presentation (NumberOfPresentations + 1) is what the SNR would have been should it have been presented (valid only when State = DONE)

result.resultsFromCha.sSRTstd = 1.3997027 // Standard deviation of the SNRs used to calculate sSRT (valid only when State = DONE)

result.resultsFromCha.CurrentSNR = -10.4 // SNR (dB) of the next presentation, if it were to be presented
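The sSRT description above (the average of the SNRs of presentations 5 through NumberOfPresentations + 1) can be reproduced offline from the per-presentation CurrentSNR values. The Python sketch below uses a hypothetical function name and assumes the caller has collected the SNR of each presented sentence in order, plus the final CurrentSNR from the summary object as the SNR of the sentence that would have come next.

```python
def sentence_srt(presented_snrs, next_snr):
    """Average the SNRs from presentation 5 onward, plus the hypothetical next one."""
    if len(presented_snrs) < 5:
        raise ValueError("at least 5 presentations are needed")
    used = list(presented_snrs[4:]) + [next_snr]  # index 4 is presentation 5
    return sum(used) / len(used)


# Illustrative SNRs only, not data from a real exam.
print(sentence_srt([0, 0, 0, 0, -2, -4], -6))  # -4.0
```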

Schema

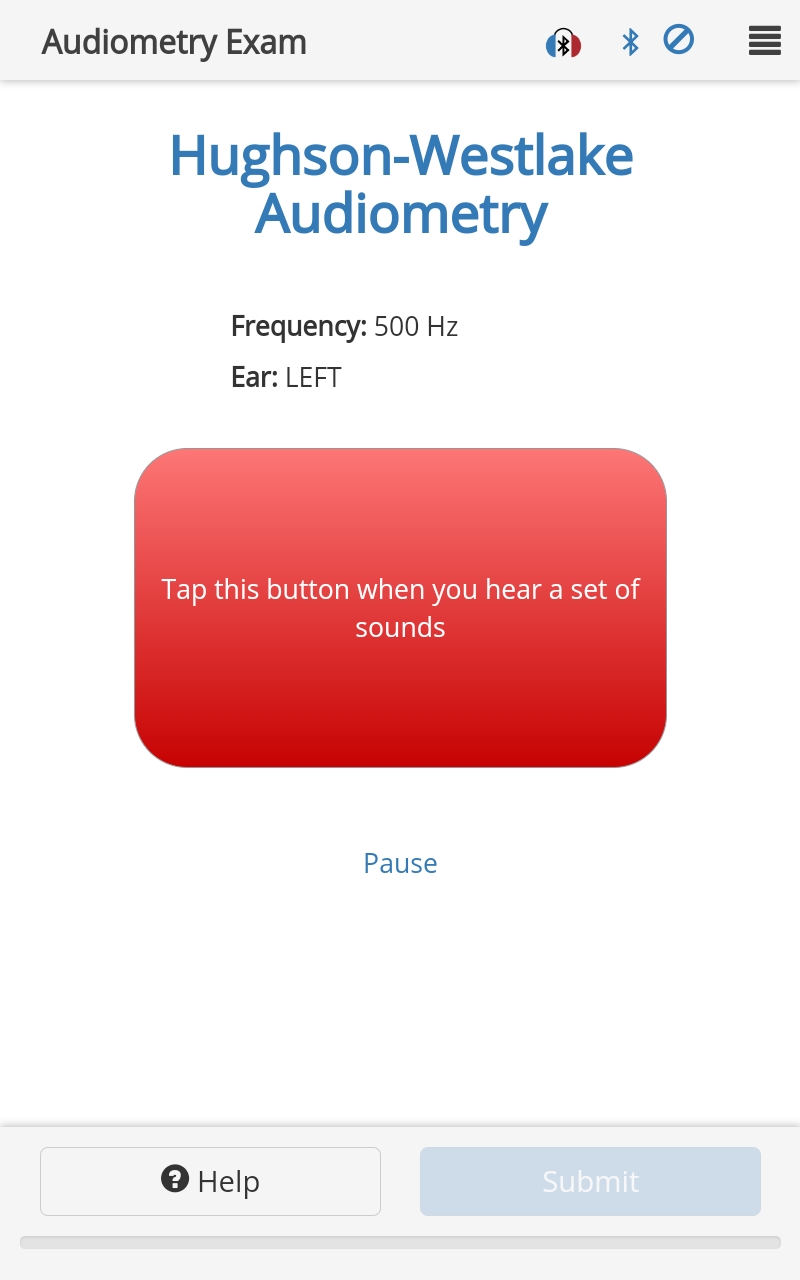

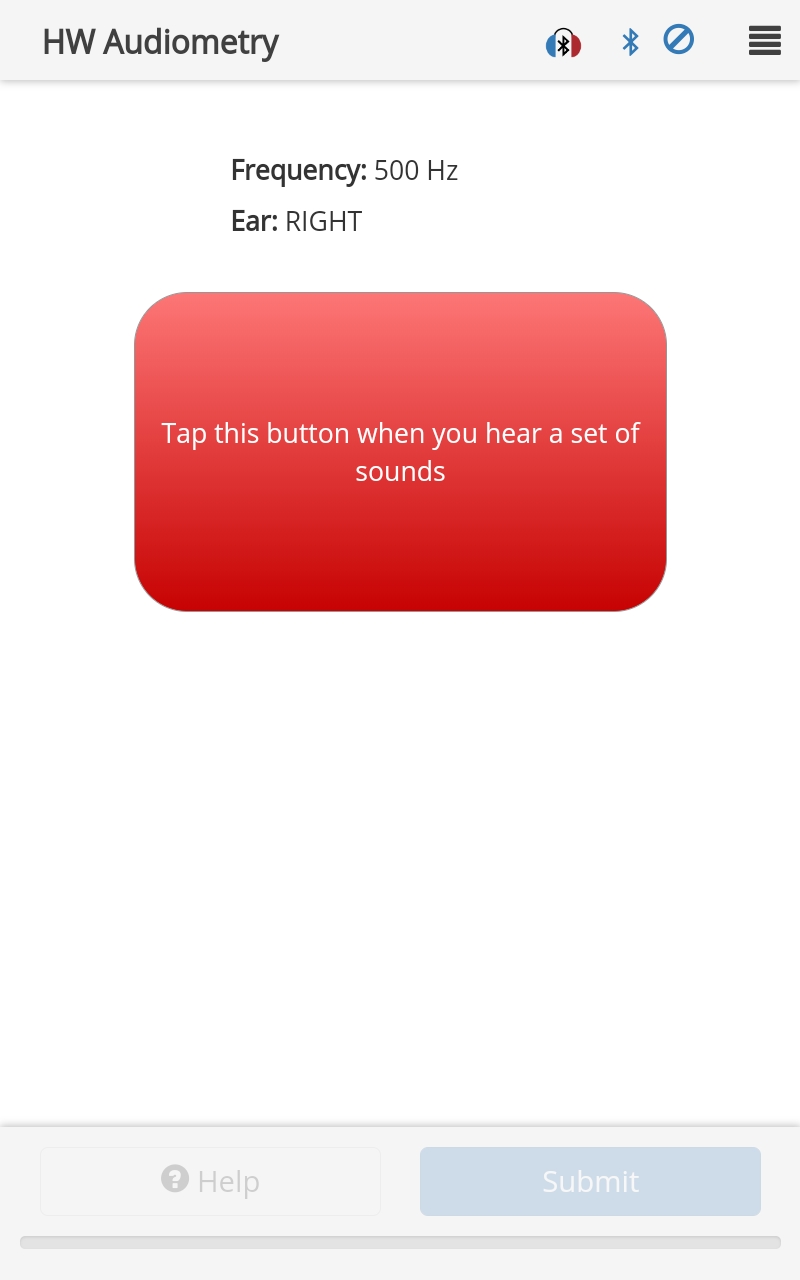

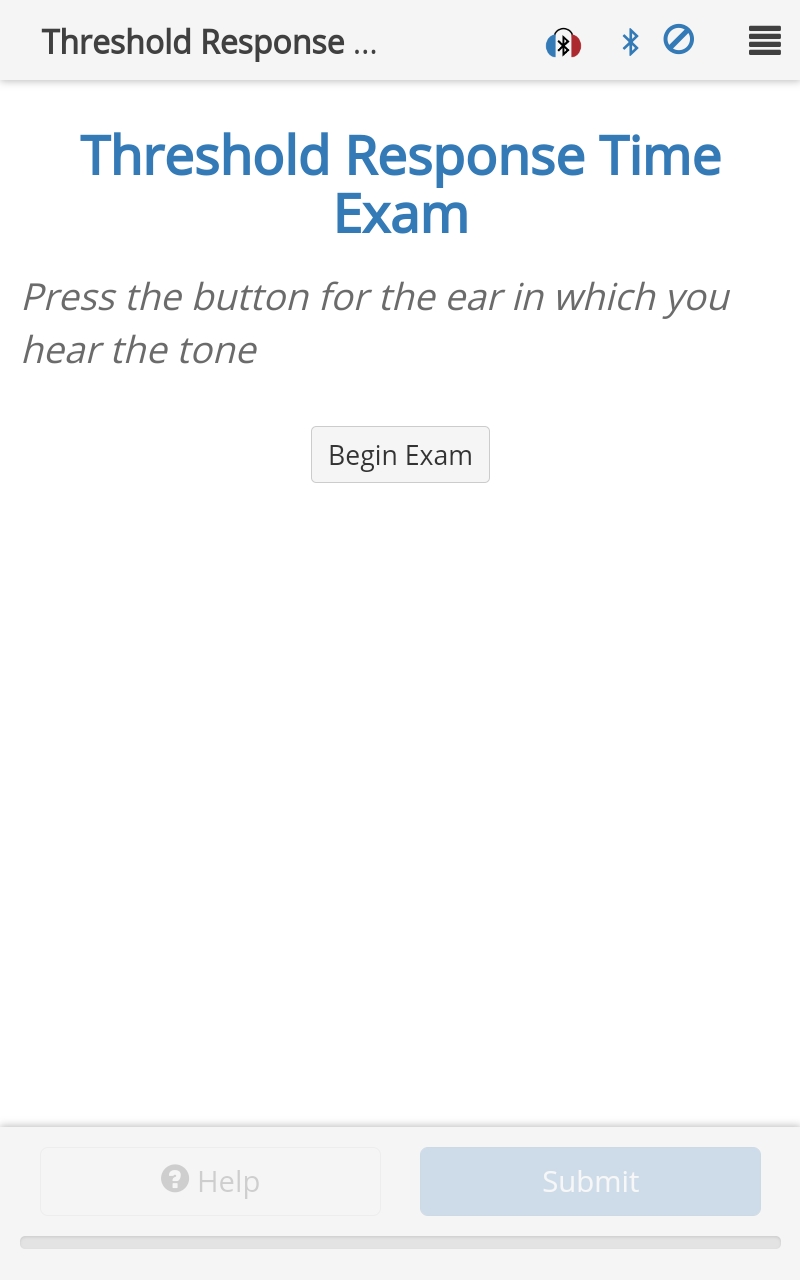

Hughson Westlake Response Area

A response area for performing a Hughson Westlake level threshold exam.

Protocol Example

{

"id": "Hughson Westlake",

"title": "HW Audiometry",

"responseArea": {

"type": "chaHughsonWestlake",

"autoSubmit": true,

"examProperties": {

"F": 500,

"Lstart": 30,

"TonePulseNumber": 3,

"UseSoftwareButton": true,

"LevelUnits": "dB HL",

"OutputChannel": "HPR0"

}

}

}

Options

Audiometry Page Properties may be defined on the PAGE, not within the responseArea.

examProperties:

- Type: object
- Description: May contain any of the properties from Hughson Westlake Exam Properties

exportToCSV:

- Type: boolean
- Description: If true, export the result to CSV upon submitting exam results. (Default = false)

Response

The result.response is a number corresponding to the threshold level in LevelUnits. The result object also contains the Common Audiometry Responses and:

result.L = [30,15, ...] // Array of levels presented

result.RetSPL = 15 // Reference Equivalent Threshold Sound Pressure Level (RetSPL) at the test frequency

result.FalsePositive = [0,0, ...] // Array of numbers indicating the number of responses to each presentation that occurred outside the polling time window (may be 0, 1, 2 or 3 where 3 indicates 3+)

result.NumCorrectResp = 0 // Number of presentations correctly answered (only used when Screener = true)

result.ResponseTime = [859,489, ...] // Array of numbers indicating the response time (ms) to each presentation (no response recorded as 0)
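The per-presentation arrays lend themselves to a quick quality summary. The Python sketch below is a hypothetical post-processing helper, not part of TabSINT. It relies on two conventions stated above: a response time of 0 means no response, and a FalsePositive value of 3 means three or more, so the total is a lower bound.

```python
def summarize_presentations(levels, response_times, false_positives):
    """Summarize result.L, result.ResponseTime and result.FalsePositive arrays."""
    answered = [t for t in response_times if t > 0]  # 0 marks "no response"
    return {
        "presentations": len(levels),
        "responses": len(answered),
        "meanResponseTimeMs": sum(answered) / len(answered) if answered else None,
        "falsePositivesAtLeast": sum(false_positives),  # 3 stands for "3 or more"
    }


# Illustrative arrays only.
print(summarize_presentations([30, 15, 20], [859, 0, 489], [0, 1, 3]))
```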

Schema

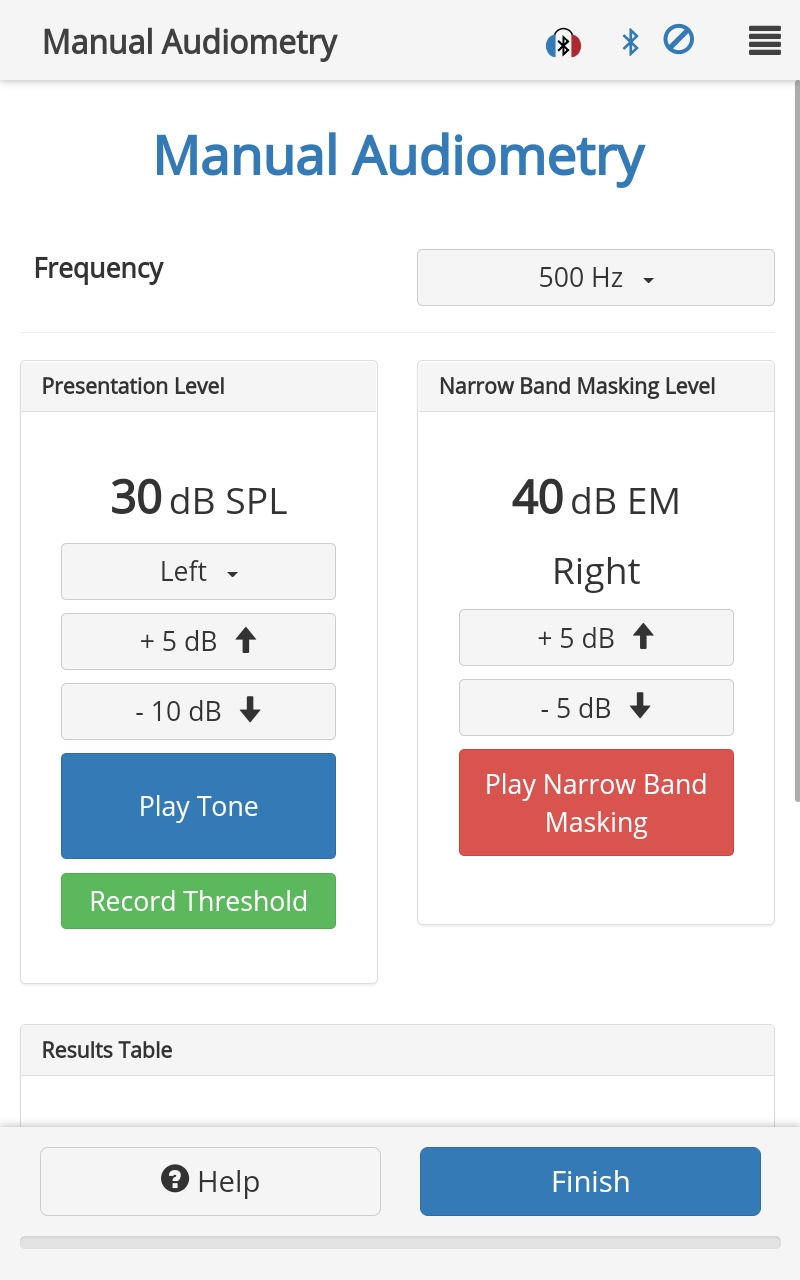

Manual Audiometry Response Area

Use this response area to run a manual audiometry exam.

Protocol Example

{

"id": "ManualAudiometry",

"title": "Manual Audiometry",

"questionMainText": "Manual Audiometry",

"submitText": "Finish",

"helpText": "[Manual Audiometry Instructions]",

"responseArea": {

"type": "chaManualAudiometry",

"minLevel": -20,

"maxLevel": 80,

"presentationList": [

{

"F": 500

},

{

"F": 1000

},

{

"F": 2000

},

{

"F": 4000

},

{

"F": 6000

},

{

"F": 8000

}

],

"examProperties": {

"LevelUnits": "dB SPL",

"Lstart": 30,

"TonePulseNumber": 5,

"OutputChannel": "HPL0",

"UseSoftwareButton": true

}

}

}

Options

examInstructions:

- Type: string
- Description: Replaces the top-level instruction text on the WAHTS exam pages (each page after starting page).

boneConduction:

- Type: boolean
- Description: If true (and using a compatible WAHTS), enable output to the bone conductor. (Default = false)

showPresentedTones:

- Type: boolean
- Description: If true, show the tone presented at each frequency on a separate chart. (Default = false)

audiometryType:

- Type: string
- Description: Which type of audiometry exam to run, can be HughsonWestlake. (Default = HughsonWestlake)

minLevel:

- Type: number
- Description: Minimum level the user can select for playing tones (hardware limited). (Default = -80)

maxLevel:

- Type: number
- Description: Maximum level the user can select for playing tones (hardware limited). (Default = 100)

minMaskingLevel:

- Type: number
- Description: Minimum level the user can select for masking noise (hardware limited). (Default = -80)

maxMaskingLevel:

- Type: number
- Description: Maximum level the user can select for masking noise (hardware limited). (Default = 80)

onlySubmitFrequenciesTested:

- Type: boolean
- Description: If true, only generate results for frequencies tested. (Default = false)

exportToCSV:

- Type: boolean
- Description: If true, export the result to CSV upon submitting exam results. (Default = false)

presentationList:

- Type: array
- Description: An array of objects defining the frequencies to run. Each object may contain:

id:

- Type: string
- Description: Custom presentationId to use for the result object for that frequency.

examProperties:

- Type: object
- Description: Object which may contain any of the following:

Response

The chaManualAudiometry response area generates a result object for each frequency and output channel combination. If onlySubmitFrequenciesTested is true, result objects will only be recorded for the frequency/output channel combinations tested.

Within each object, result.response is a number corresponding to the threshold level, in LevelUnits (or NaN if the frequency/ear combination wasn't tested and onlySubmitFrequenciesTested is false). Each result object also contains the Common Audiometry Responses and:

result.ResponseType = "threshold" // String indicating the type of response

result.presentationIndex = 5 // 0-based index of presentation within the `presentationList`

result.RetSPL = 0 // Reference Equivalent Threshold Sound Pressure Level (RetSPL) at the test frequency

result.L = [30, 35, 40] // Array of levels presented

Schema

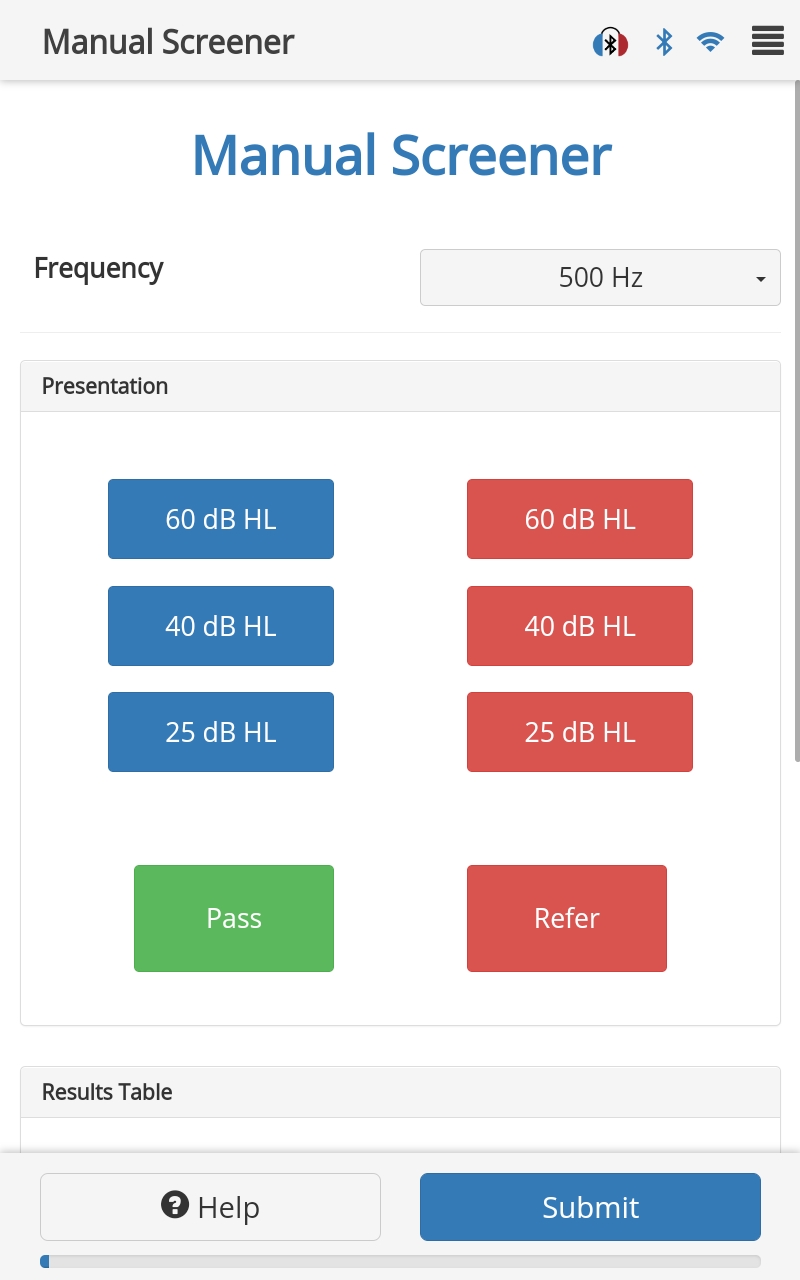

Manual Screener Response Area

Use this response area to run a manual screener exam.

Protocol Example

{

"id": "ManualScreener",

"responseArea": {

"type": "chaManualScreener",

"levels": [60, 40, 20],

"presentationList": [

{

"F": 500

},

{

"F": 1000

},

{

"F": 2000

}

],

"examProperties": {

"LevelUnits": "dB HL",

"TonePulseNumber": 5,

"UseSoftwareButton": true,

"PollingOffset": 1000,

"MinISI":1000,

"MaxISI":3000

}

}

}

Options

examInstructions:

- Type: string
- Description: Replaces the top-level instruction text on the WAHTS exam pages (each page after starting page).

audiometryType:

- Type: string
- Description: Which type of audiometry exam to run, can be HughsonWestlake. (Default = HughsonWestlake)

onlySubmitFrequenciesTested:

- Type: boolean
- Description: If true, only generate results for frequencies tested. (Default = false)

levels:

- Type: array
- Description: Array of levels to run. Does not support DynamicStartLevel. Maximum length of array is 3. (Default = [60, 40, 25])

presentationList:

- Type: array
- Description: An array of objects defining the frequencies to run. Each object may contain:

id:

- Type: string
- Description: Custom presentationId to use for the result object for that frequency.

examProperties:

- Type: object
- Description: Object which may contain any of the following:

Response

The chaManualScreener response area generates a result object for each frequency, level and output channel combination. If onlySubmitFrequenciesTested is true, result objects will only be recorded for the frequency/output channel combinations tested.

Within each object, result.response is P for pass, R for refer or - if the response was not recorded. Each result object also contains:

result.Units = "dB HL" // String giving the units of the Threshold

result.ResponseType = "pass-fail" // String indicating the type of response

result.presentationIndex = 5 // 0-based index of presentation within the `presentationList`

result.RetSPL = 0 // Reference Equivalent Threshold Sound Pressure Level (RetSPL) at the test frequency

result.L = 30 // Screening level (in result.Units).

result.F = 500 // Screening frequency in Hz.
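Since the screener produces one pass/refer result per frequency and level, a common post-processing step is to find the lowest level passed at each frequency. The Python sketch below is a hypothetical helper, not part of TabSINT, and assumes the result objects have been loaded as dictionaries with the response, F and L fields described above.

```python
def lowest_passed_level(results):
    """Map each frequency (Hz) to the lowest screening level with a 'P' response."""
    best = {}
    for res in results:
        if res["response"] != "P":
            continue  # 'R' (refer) and '-' (not recorded) are ignored
        freq, level = res["F"], res["L"]
        if freq not in best or level < best[freq]:
            best[freq] = level
    return best


# Illustrative results only.
results = [
    {"F": 500, "L": 60, "response": "P"},
    {"F": 500, "L": 40, "response": "P"},
    {"F": 500, "L": 20, "response": "R"},
    {"F": 1000, "L": 60, "response": "-"},
]
print(lowest_passed_level(results))  # {500: 40}
```

Frequencies with no passing result are absent from the returned dictionary, which keeps "referred" and "not recorded" distinguishable from a pass at the highest level.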

Schema

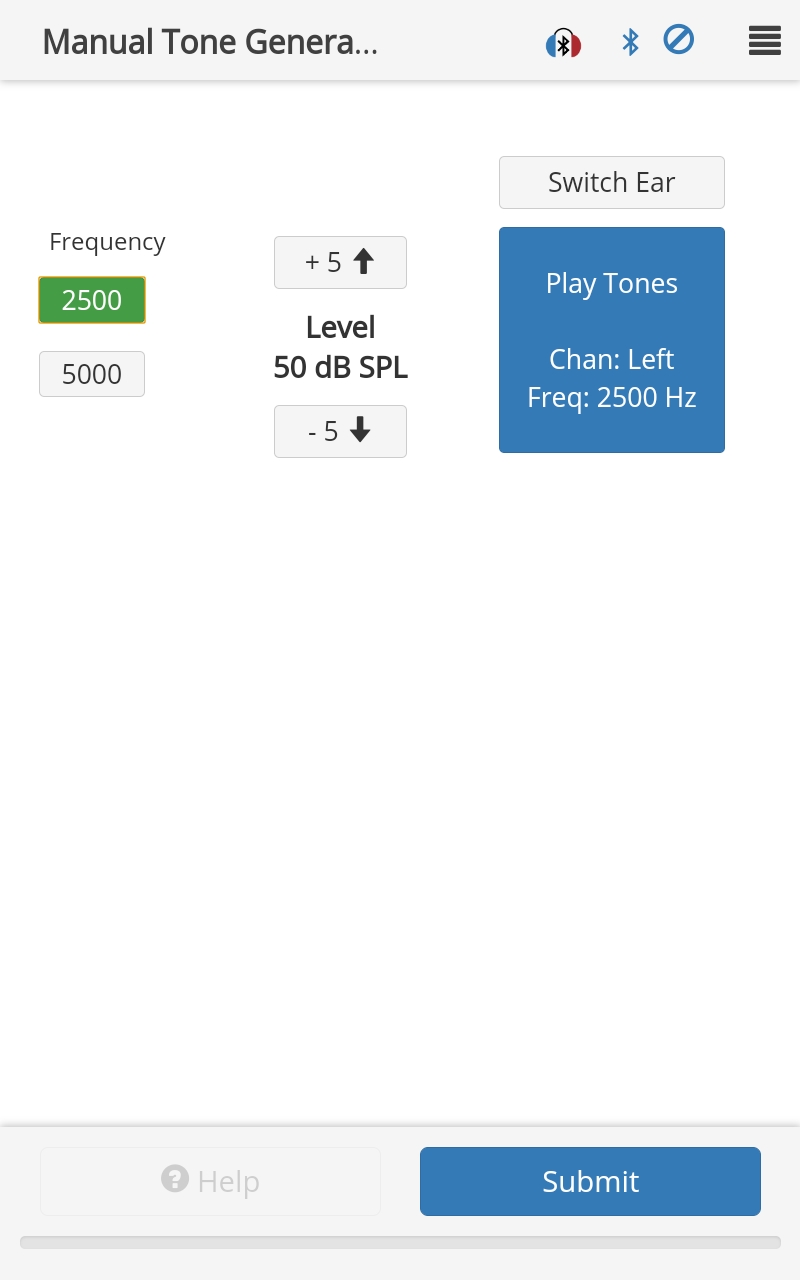

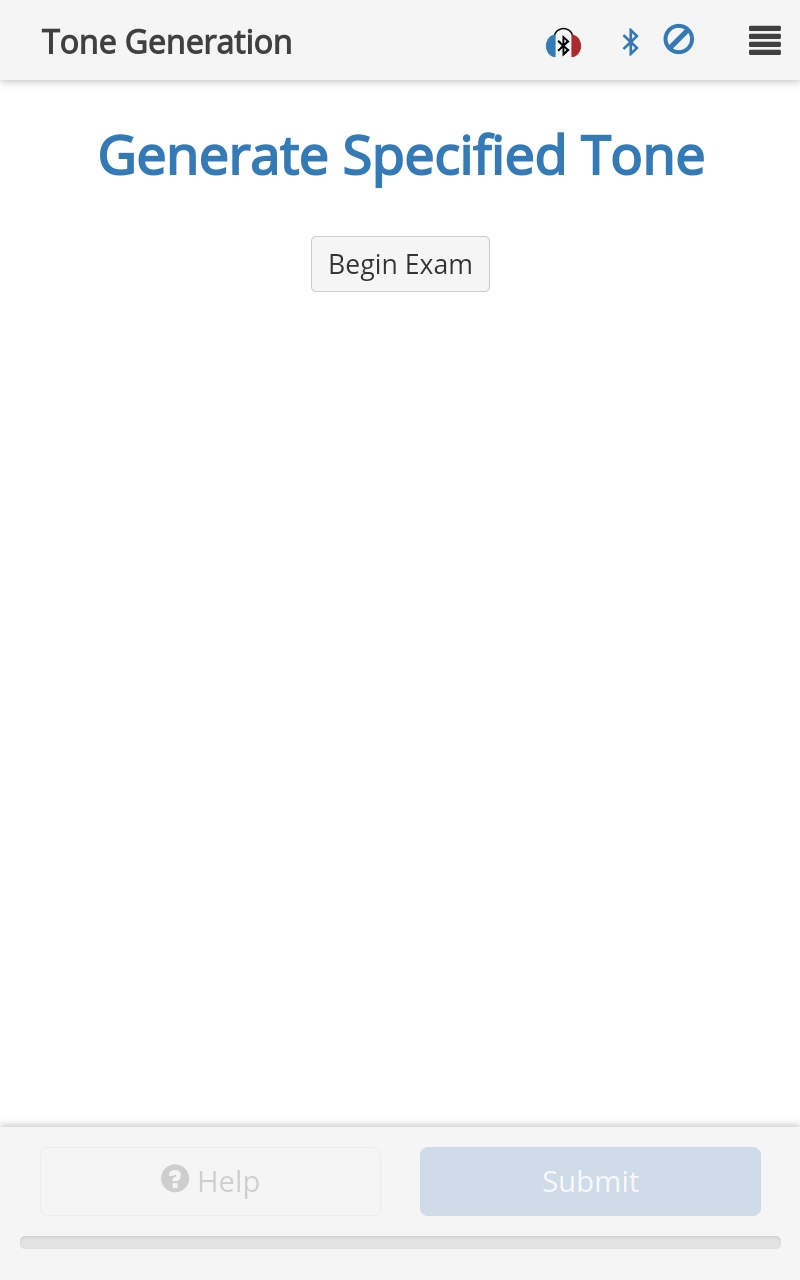

Manual Tone Generation Response Area

Use this response area to manually present different tones.

Protocol Example

{

"id": "ManualTones",

"title": "Manual Tone Generation",

"responseArea": {

"type": "chaManualToneGeneration",

"presentationList": [

{

"F": 2500,

"ToneDuration": 250,

"Level": 50

},

{

"F": 5000,

"ToneDuration": 250,

"Level": 50

}

]

}

}

Options

examInstructions:

- Type: string
- Description: Replaces the top-level instruction text on the WAHTS exam pages (each page after starting page).

minLevel:

- Type: number
- Description: Minimum level the user can select for playing tones (hardware limited).

maxLevel:

- Type: number
- Description: Maximum level the user can select for playing tones (hardware limited).

presentationList:

- Type: array
- Description: Array of available frequencies. Each array object may contain any of the Tone Generation Long Level Properties.

F:

- Type: integer
- Description: Frequency of tone/center frequency of noise. (Minimum = 1, Maximum = 32000)

commonPresentationProperties:

- Type: object
- Description: This object may contain any of the properties from Tone Generation Long Level Properties

Response

The result object from a chaManualToneGeneration response area contains only the common TabSINT responses. There are no results from the WAHTS.

Schema

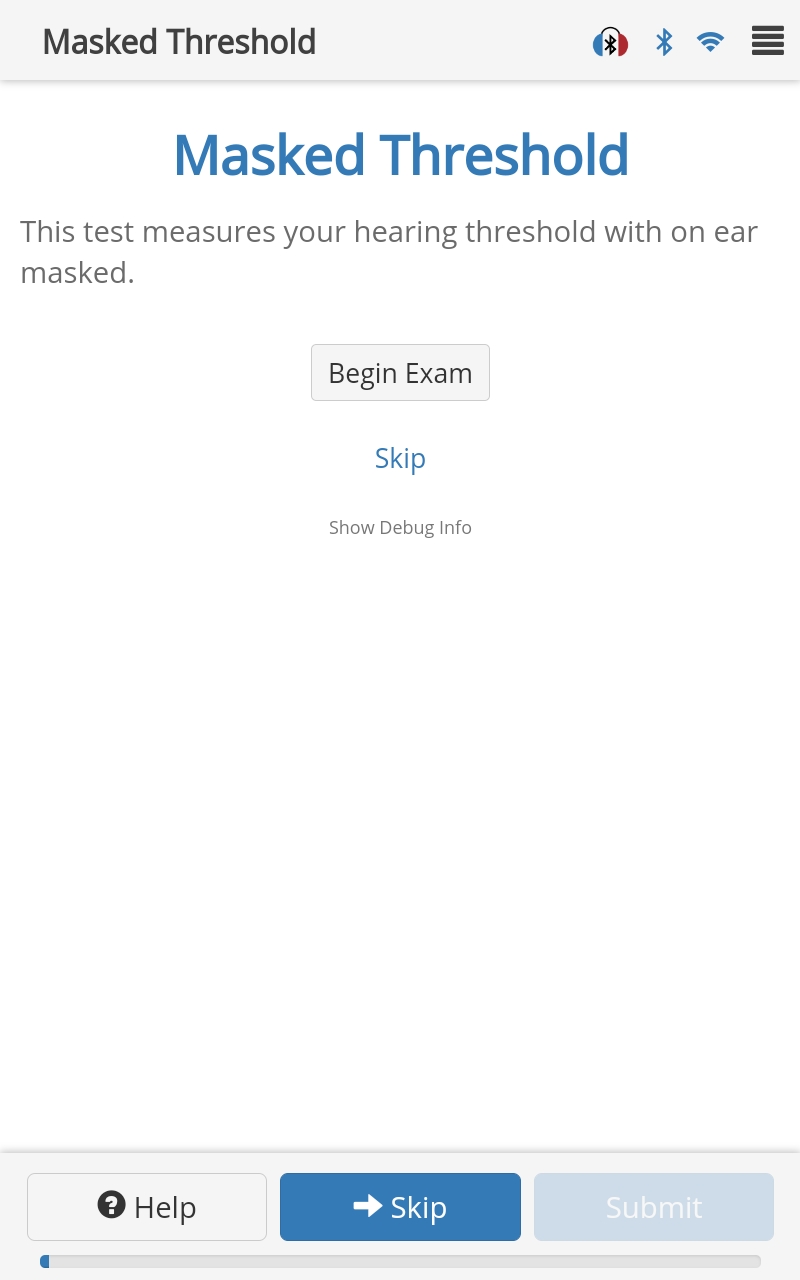

Masked Threshold Response Area

Use this response area to measure the threshold in one ear while masking the other ear with narrow band noise around a test frequency.

Protocol Example

{

"id": "MaskedThreshold",

"title": "Masked Threshold",

"questionMainText": "Masked Threshold",

"instructionText": "This test measures your hearing threshold with one ear masked.",

"responseArea": {

"type": "chaMaskedThreshold",

"examProperties": {

"F": 1000,

"TestEar": "Left",

"OutputChannel": "HPL0",

"OE": 40

}

}

}

Options

skip:

- Type: boolean
- Description: If true, allows the user to skip the exam. (Default = false)

pause:

- Type: boolean
- Description: If true, allows the user to pause the current WAHTS exam. When paused, the user is returned to the 'start' page.

autoSubmit:

- Type: boolean
- Description: If true, go straight to next page once this page is complete. (Default = false)

autoBegin:

- Type: boolean
- Description: If true, go straight into the exam, without having to press the 'Begin' button. (Default = false)

examInstructions:

- Type: string
- Description: Replaces the top-level instruction text on the WAHTS exam pages (each page after starting page).

hideExamProperties:

- Type: string
- Description: Hide the parameters of the audiometry test (i.e. Frequency, Level, Ear) before and/or during a test. Default is to show the parameters before and during a test. Options are before, during, always or never.

measureBackground:

- Type: string
- Description: Method with which to measure the background noise after an audiometry exam. The option is ThirdOctaveBands.

examProperties:

- Type: object
- Description: Properties defining the exam, including:

TestEar:

- Type: string
- Description: Test ear cannot be determined by OutputChannel since the bone oscillator output channel is used for both ears.

ThresholdLE:

- Type: number
- Description: Left ear unmasked air conduction threshold output, in dB HL. (Default = 20)

ThresholdRE:

- Type: number
- Description: Right ear unmasked air conduction threshold output, in dB HL. (Default = 20)

ThresholdBC:

- Type: number
- Description: Unmasked bone conduction threshold output, in dB HL. (Default = null)

F:

- Type: number
- Description: Frequency of the test signal (Hz). (Default = 1000, Minimum = 500, Maximum = 8000)

MaskingType:

- Type: string
- Description: Masking method to apply where the options are Auto, Optimized, and Plateau. (Default = Auto)

StepSize:

- Type: number
- Description: Increment the signal level by this amount, in dB. (Default = 5, Minimum = 1, Maximum = 10)

MaskingStepSize:

- Type: number
- Description: Increment the masking level by this amount, in dB. (Default = 5, Minimum = 1, Maximum = 10)

TonePulseNumber:

- Type: integer
- Description: Total number of tones played for each pulse train. (Default = 3, Minimum = 1, Maximum = 5)

PollingOffset:

- Type: integer
- Description: Period beyond the last pulse during which a subject response is still accepted, in ms. Enforced on the CHA: PollingOffset <= MinISI <= MaxISI. (Default = 600, Minimum = 0, Maximum = 2000)

OutputChannel:

- Type: string
- Description: Channel on which to output the test signal, where the options are HPL0, HPR0, HPL1 or HPR1. Note that the LINE channel must select the DAC opposing the MaskingChannel. (Default = HPL0)

OE:

- Type: number
- Description: Occlusion effect to account for when the OutputChannel is set to the bone oscillator (LINEL0). (Default = 0, Minimum = 0, Maximum = 80)
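The numeric limits and the PollingOffset <= MinISI <= MaxISI ordering listed above can be checked before a protocol is deployed. The Python sketch below is a hypothetical validator, not a TabSINT API. The ranges are copied from the option descriptions, and the ISI ordering is only checked when all three values are supplied, because the defaults of MinISI and MaxISI are not given in this section.

```python
# (minimum, maximum) pairs copied from the option descriptions above.
RANGES = {
    "F": (500, 8000),
    "StepSize": (1, 10),
    "MaskingStepSize": (1, 10),
    "TonePulseNumber": (1, 5),
    "PollingOffset": (0, 2000),
    "OE": (0, 80),
}


def check_masked_threshold(props):
    """Return a list of problems found in a chaMaskedThreshold examProperties dict."""
    problems = [
        f"{key}={props[key]} is outside [{low}, {high}]"
        for key, (low, high) in RANGES.items()
        if key in props and not low <= props[key] <= high
    ]
    if all(key in props for key in ("PollingOffset", "MinISI", "MaxISI")):
        if not props["PollingOffset"] <= props["MinISI"] <= props["MaxISI"]:
            problems.append("expected PollingOffset <= MinISI <= MaxISI")
    return problems


# examProperties from the protocol example above.
example = {"F": 1000, "TestEar": "Left", "OutputChannel": "HPL0", "OE": 40}
print(check_masked_threshold(example))  # []
```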

Response

The chaMaskedThreshold response area generates an array of presentation levels presented during the test, an array of masking levels presented during the test, and an array indicating a response (1) or no response (0) received for each presentation.

Schema

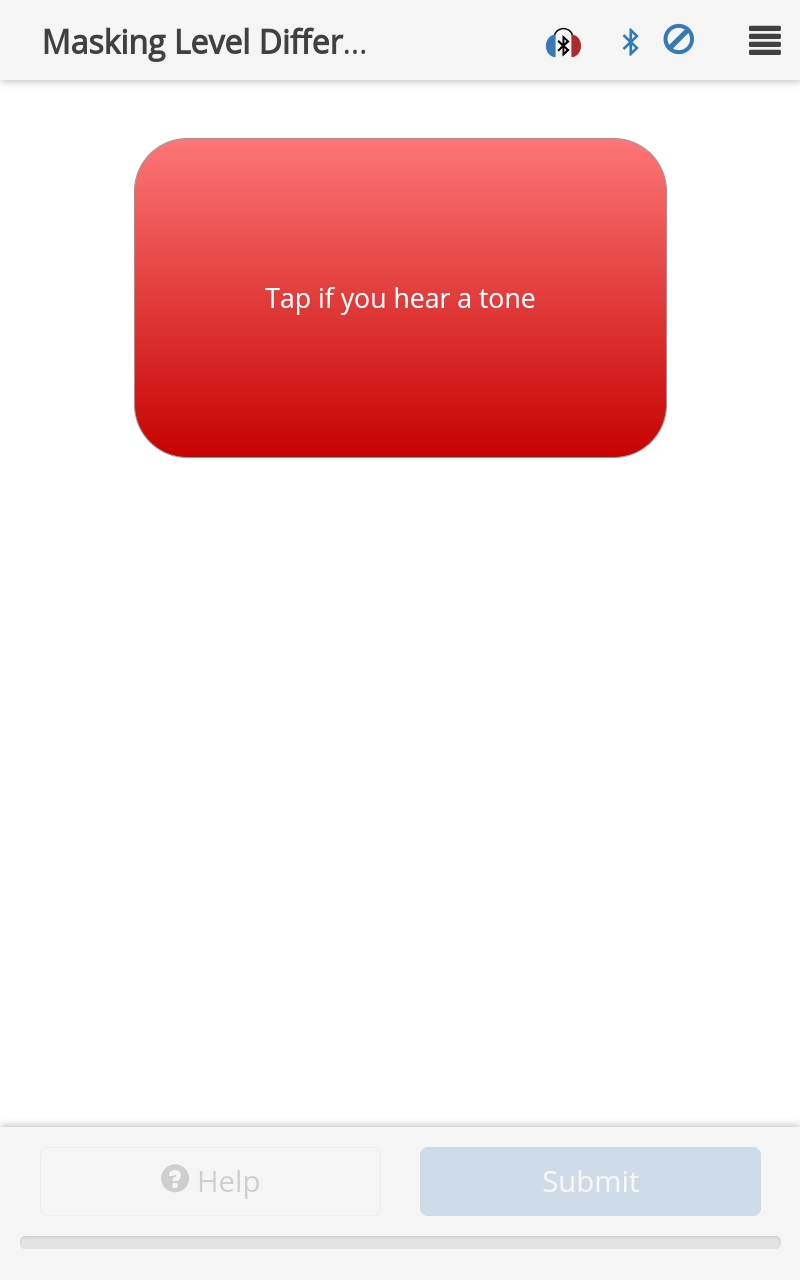

MLD Response Area

Use this response area to run a Masking Level Difference (MLD) exam.

Protocol Example

{

"id": "chaMLD",

"title": "Masking Level Difference Response Area",

"responseArea": {

"type": "chaMLD",

"examProperties": {

"UseSoftwareButton": true,

"RequireResponse": false,

"StopOnResponse": true

}

}

}

Options

skip:- Type:

boolean - Description: If

true, allows the user to skip the exam. (Default =false)

- Type:

pause:- Type:

boolean - Description: If

true, allows the user to pause the current WAHTS exam. When paused, the user is returned to the 'start' page.

- Type:

autoSubmit:- Type:

boolean - Description: If

true, go straight to next page once this page is complete. (Default =false)

- Type:

autoBegin:- Type:

boolean - Description: If

true, go straight into the exam, without having to press the 'Begin' button. (Default =false)

- Type:

examInstructions:- Type:

string - Description: Replaces the top-level instruction text on the WAHTS exam pages (each page after starting page).

- Type:

hideExamProperties:- Type:

string - Description: Hide the parameters of the audiometry test (i.e. Frequency, Level, Ear) before and/or during a test. Default is to show the parameters before and during a test. Options are

before,during,alwaysornever.

- Type:

measureBackground:- Type:

string - Description: Method with which to measure the background noise after an audiometry exam. The option is

ThirdOctaveBands.

- Type:

examProperties:Type:

objectDescription: Properties defining the exam, including:

Frequency:- Type:

number - Description: Frequency of the target tone (Hz), range is set by calibration. (Default = 500)

- Type:

ToneDuration:- Type:

number - Description: Duration of each burst (ms) of the target tone in the pulse train. (Default = 300, Minimum = 100, Maximum = 500)

- Type:

ToneRamp:- Type:

number - Description: Duration of target tone (ms) and masker noise ramp up and down. (Default = 20, Minimum = 20, Maximum = 50)

- Type:

TonePulseNumber:- Type:

number - Description: Number of tones in a pulse train. (Default = 5, Minimum = 1, Maximum = 5)

- Type:

InterToneDuration:- Type:

number - Description: Duration of the periods of silence (ms) in the signal portion before the first tone burst, between subsequent tone bursts, and after the last tone burst. (Default = 300, Minimum = 100, Maximum = 500)

- Type:

FixedSignal:- Type:

boolean - Description: If

true, the level of the signal is fixed and the desired SNR is achieved by adjusting the level of the masker. Iffalse, the masker is fixed instead. (Default =false)

- Type:

FixedLevel:- Type:

number - Description: Sound pressure level (dB SPL) of the fixed material. The SPL of the other material is set by the SNR. (Default = 70, Minimum = 20, Maximum = 85)

- Type:

Adaptive:- Type:

boolean - Description: If

true, use the adaptive algorithm. (Default =false)

- Type:

UseSoftwareButton:- Type:

boolean - Description: If

true, use a host-generated submission in response to a presentation. (Default =true)

- Type:

RequireResponse:- Type:

boolean - Description: If

true, wait for a user response. Iffalse, assume a negative response if no response is received. (Default =true)

- Type:

StopOnResponse:- Type:

boolean - Description: If

true, the presentation playback ceases once a positive response is obtained (similar to the Hughson-Westlake implementation). (Default =false)

- Type:

TimePause:- Type:

number - Description: Length of time (ms) before the next presentation after a response. (Default = 1000, Minimum = 0, Maximum = 1000)

- Type:

ResponseWindow:- Type:

number - Description: Length of time (ms) to wait for a response when

RequireResponseisfalsebefore moving on. (Default = 1000, Minimum = 0, Maximum = 1000)

- Type:

UseNoTone:- Type:

boolean - Description: If

true, randomly present presentations with no target tone to catch false positives. (Default =true)

- Type:

NMaxFalsePositives:- Type:

number - Description: Number of false positives that will be tolerated before aborting the exam. (Default = 1, Minimum = 1, Maximum = 40)

- Type:

MaskerBandpass:- Type:

array - Description: Array indicating the lower and upper cut-off frequencies for the masker bandpass filter. Range set by calibration. (Default = [200,800])

- Type:

- ReferenceSignalEar:
  - Type: number
  - Description: Channel to use for the target tone during the reference condition, where 0 = left, 1 = right, and 2 = both. (Default = 2)

- ReferenceSignalPhase:
  - Type: number
  - Description: Phase (in degrees) of the target tone delivered to the right channel. This parameter is only used if ReferenceSignalEar = 2 for the reference condition. (Default = 0, Minimum = 0, Maximum = 359)

- ReferenceMaskerEar:
  - Type: number
  - Description: Channel to use for the masker noise during the reference condition, where 0 = left, 1 = right, and 2 = both. (Default = 2)

- ReferenceMaskerPhase:
  - Type: number
  - Description: Phase (in degrees) of the masker delivered to the right channel. This parameter is only used if ReferenceMaskerEar = 2 for the reference condition. (Default = 0) The valid options are:
    - 0: deliver the exact same noise to both ears
    - 180: invert the masker at the right ear
    - -1: generate new random noise for the right ear
    - other values are invalid

- ReferenceInitialSNR:
  - Type: number
  - Description: SNR of the first presentation at the reference condition. (Default = 1, Minimum = -15, Maximum = 10)

- ReferenceNPresentations:
  - Type: number
  - Description: Number of presentations for the reference condition. (Default = 10, Minimum = 5, Maximum = 50)

- ReferenceStepSize:
  - Type: number
  - Description: The increment or decrement (in SPL) between presentations of the reference condition. (Default = 2, Minimum = 0, Maximum = 5)

- TargetSignalEar:
  - Type: array
  - Description: Channel(s) to use for the target tone during the target condition(s), where 0 = left, 1 = right, and 2 = both. (Default = [2])

- TargetSignalPhase:
  - Type: array
  - Description: Phase(s) (in degrees) of the target tone delivered to the right channel. This parameter is only used if TargetSignalEar = 2 for the target condition(s). (Default = [0], Minimum = 0, Maximum = 359)

- TargetMaskerEar:
  - Type: array
  - Description: Channel(s) to use for the masker noise during the target condition(s), where 0 = left, 1 = right, and 2 = both. (Default = [2])

- TargetMaskerPhase:
  - Type: array
  - Description: Phase(s) (in degrees) of the masker delivered to the right channel. This parameter is only used if TargetMaskerEar = 2 for the target condition(s). (Default = [0]) The valid options are:
    - 0: deliver the exact same noise to both ears
    - 180: invert the masker at the right ear
    - -1: generate new random noise for the right ear
    - other values are invalid

- TargetInitialSNR:
  - Type: array
  - Description: SNR(s) of the first presentation(s) during the target condition(s). (Default = [-7], Minimum = -15, Maximum = 10)

- TargetNPresentations:
  - Type: array
  - Description: Number(s) of presentations for the target condition(s). (Default = [11], Minimum = 5, Maximum = 50)

- TargetStepSize:
  - Type: array
  - Description: For each target condition, the increment or decrement (in SPL) to use between presentations of that condition. (Default = [2], Minimum = 0, Maximum = 5)
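The limits listed for these options lend themselves to a quick check before a protocol is loaded onto a tablet. The following is a minimal Python sketch, not part of TabSINT: the helper name check_mld_properties and the lookup tables are hypothetical, and the numeric limits are simply copied from the option descriptions above. It only covers the properties whose limits appear in this part of the list.

```python
# Hypothetical pre-flight check for chaMLD examProperties (not a TabSINT API).
# Limits are copied from the option descriptions as (minimum, maximum).
SCALAR_LIMITS = {
    "TimePause": (0, 1000),
    "ResponseWindow": (0, 1000),
    "NMaxFalsePositives": (1, 40),
    "ReferenceSignalPhase": (0, 359),
    "ReferenceInitialSNR": (-15, 10),
    "ReferenceNPresentations": (5, 50),
    "ReferenceStepSize": (0, 5),
}

# Per-element limits for the array-valued Target* properties.
ARRAY_LIMITS = {
    "TargetSignalPhase": (0, 359),
    "TargetInitialSNR": (-15, 10),
    "TargetNPresentations": (5, 50),
    "TargetStepSize": (0, 5),
}

VALID_EARS = {0, 1, 2}              # 0 = left, 1 = right, 2 = both
VALID_MASKER_PHASES = {0, 180, -1}  # same noise, inverted, new random noise


def check_mld_properties(props):
    """Return a list of human-readable problems found in an examProperties dict."""
    problems = []

    def check_range(name, value, low, high):
        if not low <= value <= high:
            problems.append(f"{name}: {value} is outside [{low}, {high}]")

    def check_member(name, value, allowed):
        if value not in allowed:
            problems.append(f"{name}: {value} is not one of {sorted(allowed)}")

    for name, (low, high) in SCALAR_LIMITS.items():
        if name in props:
            check_range(name, props[name], low, high)
    for name, (low, high) in ARRAY_LIMITS.items():
        for value in props.get(name, []):
            check_range(name, value, low, high)
    for name in ("ReferenceSignalEar", "ReferenceMaskerEar"):
        if name in props:
            check_member(name, props[name], VALID_EARS)
    for name in ("TargetSignalEar", "TargetMaskerEar"):
        for value in props.get(name, []):
            check_member(name, value, VALID_EARS)
    if "ReferenceMaskerPhase" in props:
        check_member("ReferenceMaskerPhase", props["ReferenceMaskerPhase"],
                     VALID_MASKER_PHASES)
    for value in props.get("TargetMaskerPhase", []):
        check_member("TargetMaskerPhase", value, VALID_MASKER_PHASES)
    return problems


# Example: a masker phase of 90 and a 3-presentation target run are both flagged.
example = {"ReferenceMaskerPhase": 90, "TargetNPresentations": [11, 3]}
for problem in check_mld_properties(example):
    print(problem)
```

Properties that are omitted are skipped rather than reported, on the assumption that the documented defaults apply.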

Response

The chaMLD response area generates a result object for each presentation. Each result object contains:

result.State = "DONE" // String representing the exam state (PLAYING, WAITING_FOR_RESULT, BETWEEN or DONE)

result.ResultType = "SUCCESS" // String reporting "FAIL" if no positive responses or too many false positives, otherwise "SUCCESS"

result.FailureType = "" // String indicating reason for a "FAIL" (only provided if ResultType is "FAIL")

result.Condition = "REFERENCE" // Condition of the current presentation ("REFERENCE", "TARGET" or "NO_TONE")

result.CurrentConditionCount = 10 // Counter for the current condition or sub-condition

result.FalsePositiveCounter = 0 // Current number of false positives

result.TargetIndex = -999 // Index of sub-condition (during TARGET condition, otherwise it is -999)

result.ActualFrequency = 500 // Actual frequency (Hz) of the target tone

result.CurrentSNR = -17 // SNR of the current condition or sub-condition

result.TargetThreshold = [-28,0,0,0] // Calculated SNR threshold(s) for the Target condition(s) (only valid in state DONE)

result.MLD = [10,0,0,0] // Calculated MLD(s) for each of the Target condition(s) (only valid in state DONE)

result.ReferenceSNRArray = [1,-1, ...] // Array of SNRs of each presentation during the Reference condition

result.TargetSNRArray = [-7,-9, ...] // 2-D Array of SNRs of each presentation during the Target condition(s)

result.ReferenceHitOrMiss = [true,true, ...] // Array of subject responses to each presentation during the Reference condition

result.TargetHitOrMiss = [false,true, ...] // 2-D Array of subject responses to each presentation during the Target condition(s)

result.ReferenceThreshold = -18 // Calculated SNR threshold for the Reference condition (only valid in state DONE)

userResponses.Condition = ["REFERENCE","TARGET", ...] // Array of conditions presented (REFERENCE, TARGET or NO_TONE)

userResponses.Response = [true,true, ...] // Array of user responses to the presentations
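In the sample values above, ReferenceThreshold is -18, the first TargetThreshold entry is -28, and the first MLD entry is 10, which is consistent with MLD = reference threshold minus target threshold. The Python sketch below reproduces that arithmetic and tallies responses on NO_TONE presentations from exported results. It rests on several assumptions that the text does not confirm: the function names and the n_targets argument are hypothetical, unused array slots are taken to be padding (they appear as 0 in the sample), and the two userResponses arrays are assumed to be aligned index for index.

```python
def masking_level_differences(result, n_targets):
    """Recompute the MLD for the first n_targets target conditions.

    Assumes MLD = ReferenceThreshold - TargetThreshold, which matches the
    sample values (-18 - (-28) = 10) but is not stated explicitly.
    """
    if result.get("State") != "DONE":
        # Thresholds are documented as valid only in state DONE.
        raise ValueError("thresholds are only valid in state DONE")
    reference = result["ReferenceThreshold"]
    targets = result["TargetThreshold"][:n_targets]
    return [reference - target for target in targets]


def count_no_tone_responses(user_responses):
    """Count positive responses given on NO_TONE presentations."""
    pairs = zip(user_responses["Condition"], user_responses["Response"])
    return sum(1 for condition, heard in pairs
               if condition == "NO_TONE" and heard)


# Values taken from the sample result above, with one target condition.
sample_result = {
    "State": "DONE",
    "ReferenceThreshold": -18,
    "TargetThreshold": [-28, 0, 0, 0],
}
print(masking_level_differences(sample_result, n_targets=1))

# Made-up response log, for illustration only.
sample_responses = {
    "Condition": ["REFERENCE", "NO_TONE", "TARGET", "NO_TONE"],
    "Response": [True, True, False, False],
}
print(count_no_tone_responses(sample_responses))
```

Comparing the recomputed values against result.MLD and result.FalsePositiveCounter is one way to sanity-check an export, provided the assumptions above hold for the firmware in use.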

Schema

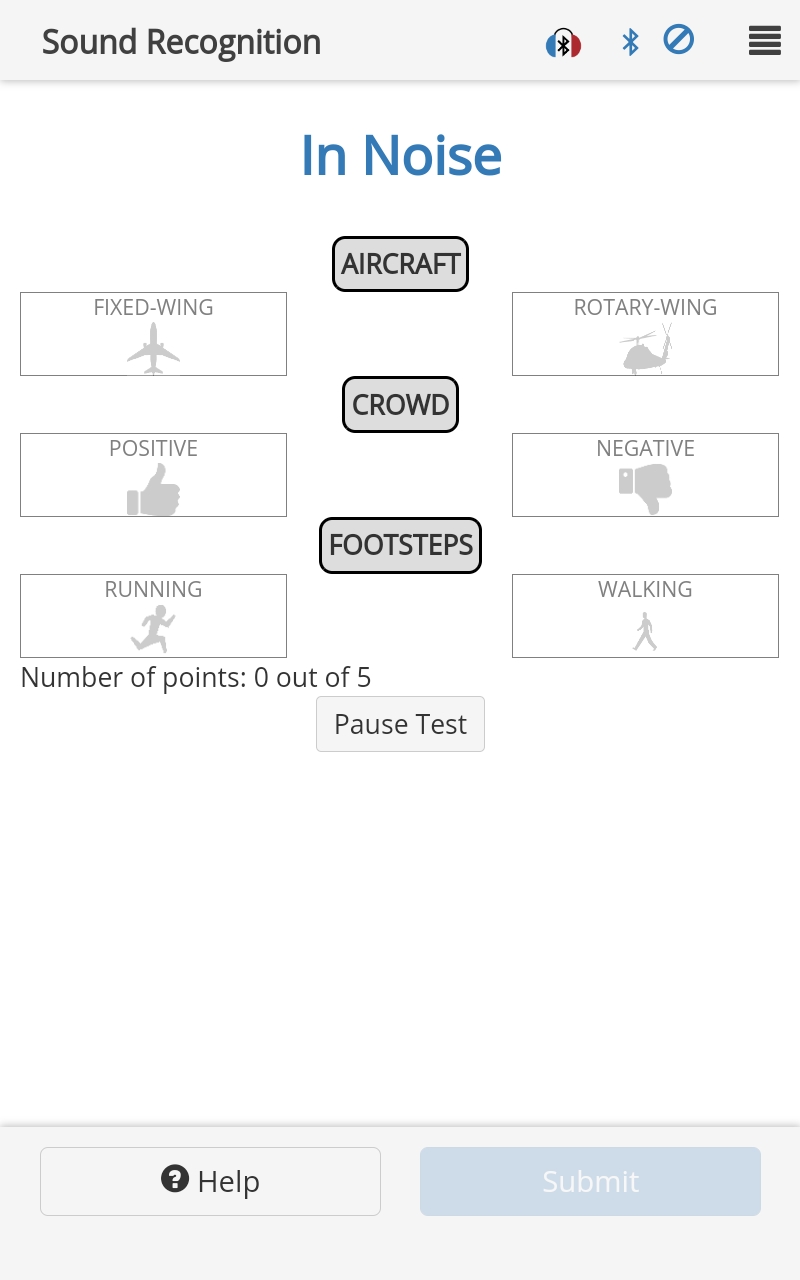

Sound Recognition Response Area

This response area is deprecated as of TabSINT version 4.4.0.

Use this response area to run a Sound Detection test.

Protocol Example

{

"id": "SoundRecognition",

"title": "Sound Recognition",

"questionMainText": "In Noise",

"helpText": "This test measures your ability to detect sounds in background noise.",

"hideProgressBar":true,

"responseArea": {

"type": "chaSoundRecognition",

"categories": [

{

"name": "AIRCRAFT",

"soundClasses": [

{

"name": "FIXED-WING",

"imgPath": "fixed-wing.jpg",

"wavfiles": [

{"path":"C:USER/SRIN/Aircraft/Jet/A-J-0001.wav"},

{"path":"C:USER/SRIN/Aircraft/Jet/A-J-0002.wav"},

{"path":"C:USER/SRIN/Aircraft/Jet/A-J-0003.wav"}

]

},

{

"name": "ROTARY-WING",

"imgPath": "rotary-wing.gif",

"wavfiles": [

{"path":"C:USER/SRIN/Aircraft/Rotor/A-R-0001.wav"},

{"path":"C:USER/SRIN/Aircraft/Rotor/A-R-0002.wav"},

{"path":"C:USER/SRIN/Aircraft/Rotor/A-R-0003.wav"}

]

}

]

},

{

"name": "CROWD",

"soundClasses": [

{

"name": "POSITIVE",

"imgPath": "thumbs up.png",

"wavfiles": [

{"path":"C:USER/SRIN/Crowd/Positive/C-P-0001.wav"},

{"path":"C:USER/SRIN/Crowd/Positive/C-P-0002.wav"}

]

},

{

"name": "NEGATIVE",

"imgPath": "thumbs down.png",

"wavfiles": [

{"path":"C:USER/SRIN/Crowd/Negative/C-N-0001.wav"},

{"path":"C:USER/SRIN/Crowd/Negative/C-N-0002.wav"}

]

}

]

},

{

"name": "FOOTSTEPS",

"soundClasses": [

{

"name": "RUNNING",

"imgPath": "running.PNG",

"wavfiles": [

{"path":"C:USER/SRIN/Footsteps/Running/F-R-0001.wav"},

{"path":"C:USER/SRIN/Footsteps/Running/F-R-0002.wav"}

]

},

{

"name": "WALKING",

"imgPath": "walking.jpg",

"wavfiles": [

{"path":"C:USER/SRIN/Footsteps/Walking/F-W-0001.wav"},

{"path":"C:USER/SRIN/Footsteps/Walking/F-W-0002.wav"}

]

}

]

}

],

"startSNR": -10,

"stepSizeSNR": 3,

"pointsGoal": 5,

"pointsAwardedForMaxedOutTrial": 0,

"pointsAwardedForWrongCategory": 0,

"backgroundNoiseLevel": 50,

"pause": true,

"nTrialsWithoutResponsePause": 1,

"noResponseMessage": "<div>It looks like you have not pressed any buttons in a while.</div><br><br><div>If you are letting it time out because you cannot recognize the sounds, press 'RESUME' to continue.</div><br><br><div>If the test does not seem to be working, see the test administrator for help.</div>",

"incorrectMessageInitial": "It looks like you are choosing some incorrect answers. Remember, only choose an answer if you are sure.",

"incorrectMessageRepeat": "It looks like you are still choosing some incorrect answers. See the test administrator for help."

}

}
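A protocol like the one above can be checked against two rules from the Options that follow: each category holds a 2-element soundClasses array whose wav file entries require a path, and the starting level equals backgroundNoiseLevel + startSNR. The Python sketch below is illustrative only; the function names are hypothetical and nothing here is a TabSINT API.

```python
def starting_level(response_area):
    """Starting playback level, taken as backgroundNoiseLevel + startSNR."""
    return response_area["backgroundNoiseLevel"] + response_area["startSNR"]


def check_categories(categories):
    """Return a list of structural problems in a chaSoundRecognition categories array."""
    problems = []
    if len(categories) < 1:
        problems.append("at least one category is required")
    for category in categories:
        label = category.get("name", "<unnamed>")
        classes = category.get("soundClasses", [])
        if len(classes) != 2:
            problems.append(f"{label}: expected 2 sound classes, found {len(classes)}")
        for sound_class in classes:
            for wav in sound_class.get("wavfiles", []):
                if "path" not in wav:
                    problems.append(f"{label}: wav file entry is missing 'path'")
    return problems


# Values from the example protocol: 50 + (-10).
print(starting_level({"backgroundNoiseLevel": 50, "startSNR": -10}))

# A trimmed-down copy of the CROWD category from the example.
crowd = {
    "name": "CROWD",
    "soundClasses": [
        {"name": "POSITIVE", "imgPath": "thumbs up.png",
         "wavfiles": [{"path": "C:USER/SRIN/Crowd/Positive/C-P-0001.wav"}]},
        {"name": "NEGATIVE", "imgPath": "thumbs down.png",
         "wavfiles": [{"path": "C:USER/SRIN/Crowd/Negative/C-N-0001.wav"}]},
    ],
}
print(check_categories([crowd]))
```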

Options

- categories:
  - Type: array
  - Description: An array of objects defining the categories for the exam (must contain at least 1 object). Each object contains:
    - name:
      - Type: string
      - Description: Name of category.
    - soundClasses:
      - Type: array
      - Description: A 2-element array of objects defining the sound classes within the category. Each object contains:
        - name:
          - Type: string
          - Description: Name of sound class.
        - imgPath:
          - Type: string
          - Description: Relative path of the image to display for the sound class.
        - wavfiles:
          - Type: array
          - Description: An array of objects defining the wav files for the sound class. Each object can contain:
            - path:
              - Type: string
              - Description: Path to the wav file on the CHA, for example "C:USER/SRIN/Aircraft/Jet/A-J-0001.wav" (required).
            - playbackLevelAdjustment:
              - Type: number
              - Description: Allows fine tuning of wav file playback levels, where playback level = level + playbackLevelAdjustment (optional).

- startSNR:
  - Type: integer
  - Description: Starting SNR. Starting level = backgroundNoiseLevel + startSNR. (Default = -15, Minimum = -30, Maximum = 30)

- stepSizeSNR:
  - Type: integer
  - Description: Increase playback level by this amount (dB) each time the subject does not hear the sound. (Default = 1, Minimum = 0, Maximum = 10)

- maxSNR:
  - Type: integer
  - Description: Maximum SNR to be presented. Maximum level is